Build Server 6 — An easier HCI?

The What

I want a whole “cloud” for myself and people around me. The services I want to host are:

- Authentik — Single Sign On and centralized accounts

- Incus — to provide VPS’s to people

- Coder — for remote development environments, but also Kali/Linux containers accessible via the browser

- Forgejo — Code forge, with CI/CD

- Nextcloud — a “cloud” with file sync, editing, and the like

- I am also considering alternatives, like OpenCloud or Cryptpad for the features I may want from nextcloud

- Element Server Suite — A complete discord alternative

- Something for Large Language Models

- Preferably with history per OIDC user

- Probably: https://artifacthub.io/packages/helm/open-webui/open-webui, see also

The Why

In my previous project, I attempted to deploy Openstack entirely with GitOps methodologies, using FluxCD.

I failed. Well, it didn’t fail, but I think I’ve spent too long on it and I need to move on.

Recently, I’ve discovered a project that simultaneously seems to be a distribution of OpenStack, Kubernetes, and Linux, called StarlingX.

Also:

StarlingX OpenStack is installed and managed as an FluxCD application.

Now, StarlingX, sadly, is not GitOps OpenStack. Configurations to the OpenStack application are done via the command line, or via helm value files. Perhaps I can clone the flux repo they use, but truthfully, I’m not going to worry about it for now.

StarlingX Attempt

There are multiple deployment guides, but I am interested in the “Simplex” install, which documents how to do an All in One install, using a single server, which is all I have.

I also care a lot about “For OpenStack only: Configure data interfaces for controller-0. Data class interfaces are vSwitch interfaces used by vSwitch to provide VM virtio vNIC connectivity to OpenStack Neutron Tenant Networks on the underlying assigned Data Network.”, since I only have one physical interface in use, and the other one will be virtual.

I started by obtaining an ISO and attempting to install it in a virtual machine. The installer crashed because it wanted 500 GB of storage… which is fair, I guess. At least it doesn’t crash because I don’t have enough CPU cores or RAM.

Just kidding. It doesn’t boot. I don’t know why. Instead it stays stuck on “booting on hard disk”. This probably would work better on a physical machine, but I want to make sure it works on a virtual machine first.

But I install it on my physical server anyway. It doesn’t get an IP address via DHCP.

I understand now why people like turnkey solutions like ESXi.

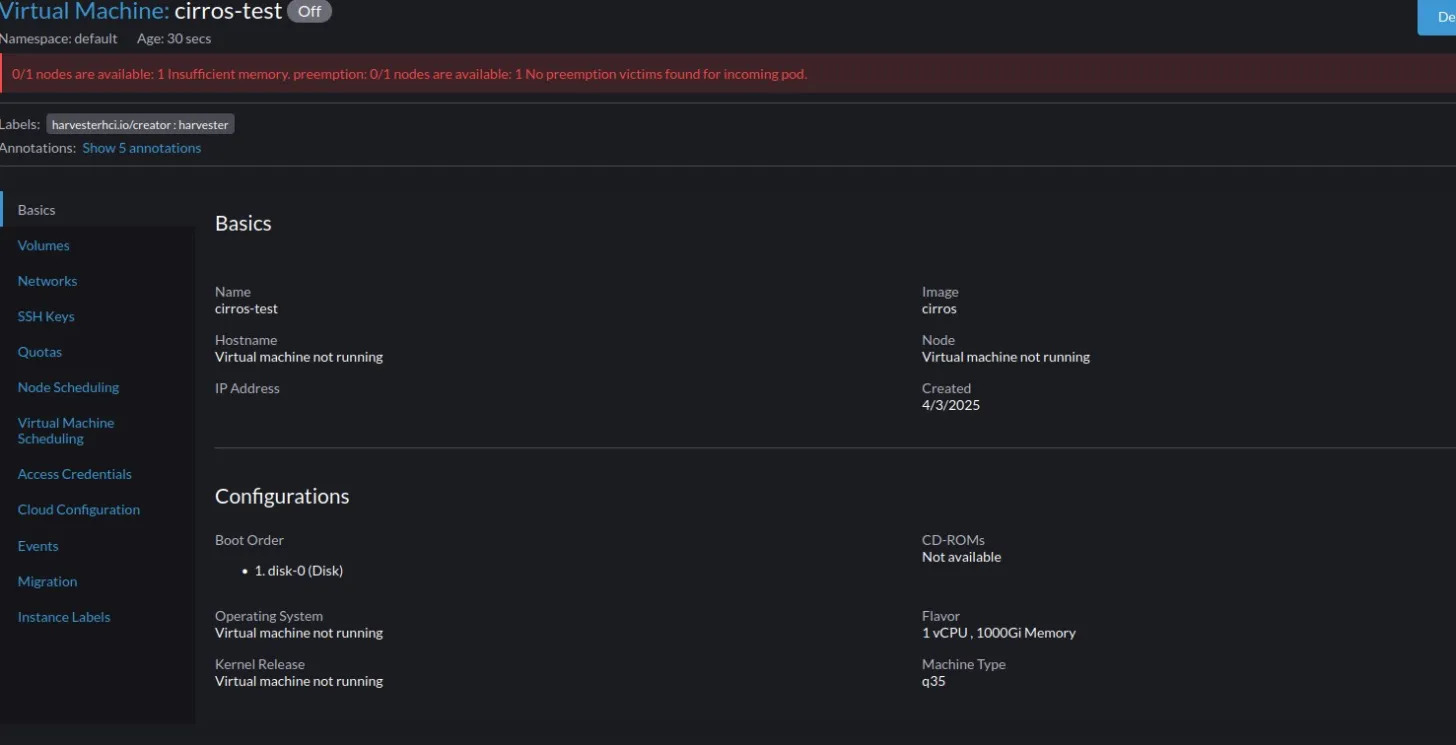

I look into another HCI solution: SUSE Harvester. It’s actually very good software: the UI is great and the install is easy. But if you want any kind of authorization and control over who can see which projects, you have to deploy Rancher, which can then “consume” the Harvester API and work with it. While excellent, I don’t really feel like having to set up Rancher, either on another server or in a virtual machine.

In addition to that, I could not figure out how networking works, and how to set up a bridged network. I did deploy a virtual machine, although at first I accidentally gave it 1000 Gi of ram and it would not run because my machine does not have 1000 Gi of ram.

Also, I did find something funny though:

But at this point, I was done, and I began looking into something else, Incus.

Incus:

- Can be installed on top of another distro

- Has networking I understand, “simply” bridging

- Has authorization and authentication by restricting TLS certs to a project

- Not the complex SSO I want, but I need something working

Incus also has some nice features, like it can access a repository of LXC images (similar to Dockerhub), which is cool for testing out many different Linux distros.

Anyway, I did actually attempt to deploy it using Debian backports.

root@b718c16e3b2d:/etc/apt/sources.list.d# apt install incus

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

Some packages could not be installed. This may mean that you have

requested an impossible situation or if you are using the unstable

distribution that some required packages have not yet been created

or been moved out of Incoming.

The following information may help to resolve the situation:

The following packages have unmet dependencies:

incus : Depends: qemu-system-x86 (>= 1:8.0) but 1:7.2+dfsg-7+deb12u12 is to be installed

E: Unable to correct problems, you have held broken packages.

Disappointing.

Instead, I followed the instructions on the github… mostly.

/etc/apt/sources.list.d/zabbly-incus-lts-6.0.sources

Enabled: yes

Types: deb

URIs: https://pkgs.zabbly.com/incus/lts-6.0

Suites: bookworm

Components: main

Architectures: amd64

Signed-By: /etc/apt/keyrings/zabbly.asc

This is a container; I will test a virtual machine install later on.

Installing Debian

https://wiki.debian.org/UEFI#Force_grub-efi_installation_to_the_removable_media_path — gotta do this in the installer

Networking

Switch from ifupdown/iproute2 to NetworkManager

/etc/network/interfaces

# This file describes the network interfaces available on your system

# and how to activate them. For more information, see interfaces(5).

source /etc/network/interfaces.d/*

# The loopback network interface

auto lo

iface lo inet loopback

# The primary network interface

#allow-hotplug enp0s25

#iface enp0s25 inet dhcp

Comment out the network interfaces.

/etc/NetworkManager/NetworkManager.conf

[main]

plugins=ifupdown,keyfile

[ifupdown]

managed=true

I also changed “managed” from false to true.

Once I do this, I use Cockpit to convert the main network interface into a bridge that also acts as an Ethernet interface.

So I got incus working. In a new/incognito tab, you can go to /ui/login, and follow the instructions to set up the UI.

I attempt to set up an Alpine instance, with the network attached directly to my ethernet/bridge combo. Yeah, that was a bad idea, as it took my network offline.

Instead, I decided to create a veth, and then attach that to my bridge (OpenStack style… ugh. I can’t believe I’m doing this again).

nmcli con add type veth ifname veth1 con-name veth1 veth.peer eth1

And then add the veth1 as a port to the eth0 bridge.

And then after that:

incus network create public0 bridge.external_interfaces=eth1 ipv4.address=none ipv4.dhcp=false ipv6.address=none ipv6.dhcp=false

This essentially creates a public network where Incus itself provides no DNS or DHCP. Instead, virtual machines get their addresses via DHCP from the network on the other side of the bridge, and they do!

But I realize something: I have two ethernet ports available to me, but I am only using one of them. I should use either a bond or a team to combine them into one, and then convert that merged interface into a bridge that also acts as an ethernet port.

Based on this comparison chart, it looks like Teaming is better.

root@thoth:~# speedtest

Retrieving speedtest.net configuration...

Testing from California Research and Education Network (130.166.90.206)...

Retrieving speedtest.net server list...

Selecting best server based on ping...

Hosted by Melbicom (Los Angeles, CA) [32.80 km]: 2.94 ms

Testing download speed................................................................................

Download: 93.59 Mbit/s

Testing upload speed......................................................................................................

Upload: 86.25 Mbit/s

It looks like my upload and download are capped at 100 Mbit/s. Let’s see if we can double that.

Okay, but it looks like teaming has been deprecated — but in Red Hat, or in general? My Debian system still seems to be able to set up teaming.

Okay, but now that I know I want bonding, which mode do I want? Some modes seem to require configuration on the switch, which rules them out, but I want best performance from the modes I can use.

Testing time!

I did two kinds of tests: one with speedtest, and another with speedtest & speedtest & speedtest, to see if more in parallel would have different results.

Better than it would be alone, but it still seems bad.

| Bond Method Used | Single Speedtest (Mbit/s) | Triple Speedtest Down (Mbit/s) | Triple Speedtest Up (Mbit/s) |
|---|---|---|---|
| No Bond | 93.59 down, 86.25 up | 30.12, 29.33, 23.13 | 41.62, 32.98, 52.79 |
| balance-alb | 93.60 down, 91.53 up | | |
| balance-rr | 99.95 down, 161.43 up | 58.96, 59.08, 61.67 | 59.84, 57.80, 59.80 |
| balance-tlb | 93.60 down, 87.51 up | | |
| 802.3ad / LACP | 93 down, 90 up | 32, 32, 28 | 31, 27, 29 |
| balance-xor | | 35, 37, 17 | 38, 35, 46 |

Okay, that’s enough speedtest tests. I should probably switch to iperf3 to test parallel connections properly.

iperf3 -c ping.online.net -p 5203

Iperf testing:

| Bond Method Used | Single Stream (Mbit/s) | Multi Stream (Mbit/s total) |
|---|---|---|
| No bond | 77 (average), 94 (top) | |
| 802.3ad / LACP | 94, 77 | 80, 70 (no improvement…) |
| Round Robin | 120 | 167, 143 |
| balance-alb | 75 | 75 |

I notice that the first packet seems to have a lot less information, and then it’s the stuff after that that usually hits near 100 mbit/s in speed.

(I also ran a quick check with iperf to make sure that both interfaces actually worked, which they did: each one got 70 Mbit/s of bandwidth.)

Round robin works by just rotating which interface gets the next packet. According to the internet, it can result in packets arriving out of order, which can lead to slower speeds than a single nic in some cases. I can’t find any posts on this that are newer than 3 years old, so I don’t know if it applies, but I wanted to avoid round robin for that reason.
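The reordering concern can be illustrated with a toy model. This is only a sketch: the per-link delays are made-up numbers, not measurements from this machine, and `round_robin_arrivals` is a hypothetical helper, not how the kernel bonding driver is implemented.

```python
from itertools import cycle


def round_robin_arrivals(n_packets, link_delays):
    """Send packet i at time i, rotating over the links, and return
    the sequence numbers in the order they would arrive."""
    links = cycle(range(len(link_delays)))
    arrivals = []
    for seq in range(n_packets):
        link = next(links)  # balance-rr style: next packet, next link
        arrivals.append((seq + link_delays[link], seq))
    arrivals.sort()  # order by arrival time
    return [seq for _, seq in arrivals]


if __name__ == "__main__":
    # Two links with identical delay: packets stay in order.
    print(round_robin_arrivals(6, [1, 1]))
    # One link slower than the other: neighbouring packets swap places.
    print(round_robin_arrivals(6, [1, 3]))
```

With unequal delays the receiver sees packets out of sequence, which is the situation that can make TCP back off and end up slower than a single NIC.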

People say the best mode is LACP, but I don’t get any performance improvement. However, according to Red Hat’s documentation, this mode does seem to require some configuration on the side of the network switch. Perhaps without that configuration you can still create a bond and combine two interfaces into one, but you don’t get any performance out of it?

But when I run iperf on each of the physical interfaces simultaneously, perhaps there is some kind of cap? Maybe the “two” ethernet interfaces in the wall are actually only one? But then why does round robin have consistent, better performance, over the theoretical 100 Mbit/s limit?

I think it is the former issue:

root@thoth:~# cat /proc/net/bonding/bond0

Ethernet Channel Bonding Driver: v6.1.0-32-amd64

Bonding Mode: IEEE 802.3ad Dynamic link aggregation

Transmit Hash Policy: layer2 (0)

MII Status: up

MII Polling Interval (ms): 100

Up Delay (ms): 0

Down Delay (ms): 0

Peer Notification Delay (ms): 0

802.3ad info

LACP active: on

LACP rate: slow

Min links: 0

Aggregator selection policy (ad_select): stable

System priority: 65535

System MAC address: 48:4d:7e:ec:54:2f

Active Aggregator Info:

Aggregator ID: 1

Number of ports: 1

Actor Key: 7

Partner Key: 1

Partner Mac Address: 00:00:00:00:00:00

Slave Interface: enp0s25

MII Status: up

Speed: 100 Mbps

Permanent HW addr: 48:4d:7e:ec:54:2f

Slave queue ID: 0

Aggregator ID: 1

Slave Interface: enp9s0

MII Status: up

Permanent HW addr: 48:4d:7e:ec:54:31

Aggregator ID: 2

Much of the information has been omitted for brevity, but it seems that if your switch doesn’t support LACP or isn’t configured for it, then the “Aggregator ID” of the two interfaces will be different when it’s supposed to be the same, meaning only one ethernet interface is actually getting used.
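A minimal sketch of that check, assuming the file keeps the layout shown above (a "Slave Interface:" line followed later by that interface's own "Aggregator ID:" line). The function names are hypothetical helpers, not part of any tool.

```python
def slave_aggregator_ids(bonding_text):
    """Map each slave interface to the first Aggregator ID listed under it."""
    ids = {}
    current = None
    for raw in bonding_text.splitlines():
        line = raw.strip()
        if line.startswith("Slave Interface:"):
            current = line.split(":", 1)[1].strip()
        elif line.startswith("Aggregator ID:") and current is not None:
            ids[current] = int(line.split(":", 1)[1])
            current = None  # ignore further IDs until the next slave
    return ids


def lacp_looks_negotiated(bonding_text):
    """True only if there are several slaves and they share one aggregator."""
    ids = slave_aggregator_ids(bonding_text)
    return len(ids) > 1 and len(set(ids.values())) == 1


if __name__ == "__main__":
    with open("/proc/net/bonding/bond0") as handle:
        text = handle.read()
    print(slave_aggregator_ids(text), lacp_looks_negotiated(text))
```

On the output above this would report aggregator 1 for enp0s25 and aggregator 2 for enp9s0, so the check fails.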

Instead of LACP, I set up balance-alb and use ifstat to monitor network traffic:

enp9s0 enp0s25 incusbr0 bond0

KB/s in KB/s out KB/s in KB/s out KB/s in KB/s out KB/s in KB/s out

1.17 13.88 1.91 0.12 0.00 0.00 3.09 14.01

0.88 34.00 2.31 0.12 0.00 0.00 3.19 34.12

0.87 13.68 1.30 0.12 0.00 0.00 2.17 13.80

1.27 28.83 3.04 0.12 0.00 0.00 4.31 28.95

1.02 75.72 8.41 0.70 0.00 0.00 9.43 76.42

1.06 1645.20 36.86 1.95 0.00 0.00 37.91 1647.15

10.93 11556.15 461.64 2343.31 0.00 0.00 472.64 13900.86

662.03 12008.30 0.75 12008.21 0.00 0.00 662.78 24016.50

378.35 12006.45 224.79 12010.69 0.00 0.00 603.08 24017.15

1.47 11989.03 621.00 12012.35 0.00 0.00 622.53 24005.57

1.21 12008.51 617.68 12010.60 0.00 0.00 618.82 24020.51

0.90 12024.64 614.12 12012.30 0.00 0.00 615.02 24029.94

1.14 11998.92 545.02 11707.88 0.00 0.00 546.16 23708.20

0.72 12005.98 438.31 5809.23 0.00 0.00 439.04 17822.20

1.17 12006.77 491.46 8342.55 0.00 0.00 492.70 20340.93

1.11 5736.56 445.10 11851.00 0.00 0.00 446.14 17587.56

2.98 32.26 115.14 4567.08 0.00 0.00 118.12 4599.34

Nice! When running two iperf tests at once, to two different servers, both interfaces are used at once, and I get double the network traffic! Both iperf tests report 80 Mbit/s.
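To compare the ifstat columns with the 100 Mbit/s link speed, the KB/s figures need converting. A small sketch, assuming 1 KB means 1000 bytes (if ifstat counts 1024 bytes per KB, the results are about 2.4% higher):

```python
def kbytes_per_s_to_mbit(kb_per_s, bytes_per_kb=1000):
    """Convert a KB/s reading into Mbit/s."""
    return kb_per_s * bytes_per_kb * 8 / 1_000_000


if __name__ == "__main__":
    # Roughly what each interface shows at full load in the table above.
    print(kbytes_per_s_to_mbit(12000))
    # Roughly what bond0 shows when both interfaces are busy.
    print(kbytes_per_s_to_mbit(24000))
```

So about 12000 KB/s out per interface is each 100 Mbit/s link running close to full, and bond0 at about 24000 KB/s is roughly both of them together.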

Then, I convert this bond to a bridge using Cockpit. In the process of attempting to do so, the machine goes offline. It’s possible that you cannot convert a bond to the special bridge that also acts as a normal ethernet interface at the same time, or maybe you cannot use a bond as a bridge at all.

No, it does work. It’s just that the address was reassigned via DHCP, and I also wasn’t SSHing into the right port (I use port 22022 rather than the default 22).

And then: nmcli con add type veth ifname veth1 con-name veth1 veth.peer eth1

And then disable both from cockpit, but add veth1 as a port to the main bridge.

Then, eth1 can be used as a bridge for incus.

incus network create public0 bridge.external_interfaces=eth1 ipv4.address=none ipv4.dhcp=false ipv6.address=none ipv6.dhcp=false

And then it works.

A friend points out that the speed limitation may not be on my side (the NIC or the cable) but on CSUN’s side: a 100 Mbit/s limit per MAC address.

I test this by running three speedtests simultaneously, two on the side of CSUN’s internet, and one within a virtual machine that has its own MAC address. But the test only gets 200 Mbit/s total across every device.

However, there is a real possibility I am using the wrong cables, and it’s the cables that are limiting. I will test

Incus Configuration

Firstly, I don’t want the “default” profile. It forces the incusbr0 network on virtual machines, whereas I want users to be able to select which network they want: either a public network or an internal network.

A big thing I want is for the layer8 project to have its own separate set of networks and firewall rules, but still be able to have access to the public0 network I have created, so people can create public virtual machines.

From incus project edit layer8:

config:

features.images: "false"

features.networks: "false"

features.networks.zones: "true"

features.profiles: "true"

features.storage.buckets: "true"

features.storage.volumes: "false"

restricted: "true"

restricted.networks.access: public0, internal0

description: ""

name: layer8

used_by:

- /1.0/profiles/default?project=layer8

There is no network named internal0 yet; instead, I add one after I create the project. With this, users are limited to a single internal network and the public network.
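The same project definition can be written as a request body. This is a sketch under the assumption that it would be sent to the Incus REST API's project creation endpoint (`POST /1.0/projects`, as far as I understand the API docs); `project_payload` is a hypothetical helper. The point it shows is that every config key is flat and every value is a string.

```python
import json


def project_payload(name, allowed_networks, description=""):
    """Build the JSON body for a restricted project like layer8."""
    return {
        "name": name,
        "description": description,
        "config": {
            "features.images": "false",
            "features.networks": "false",
            "features.networks.zones": "true",
            "features.profiles": "true",
            "features.storage.buckets": "true",
            "features.storage.volumes": "false",
            "restricted": "true",
            # A comma-separated string, not a list.
            "restricted.networks.access": ", ".join(allowed_networks),
        },
    }


if __name__ == "__main__":
    print(json.dumps(project_payload("layer8", ["public0", "internal0"]), indent=2))
```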

Bugs

I have found two, but haven’t really bothered replicating them to report them properly.

- ISOs uploaded via the web UI seem to always be put into the default project instead of whatever project I want them to be in.

- Only email works as the oidc.claim for CLI authentication to Incus; nickname, preferred_username, and username do not work, although they do work for web UI auth. Fixed: either a newer update, or the decision to set up OIDC and OpenFGA from the get-go, has fixed this for me.

Kubernetes (Again)

Next up is the rest of the services I want to host. I still want to do a Kubernetes cluster, with everything GitOps though, but having Incus opens up another opportunity: I could do a multi-node Kubernetes cluster, in virtual machines, instead of a single node.

I know I still want SSO, and I actually want SSO for Incus as well. After looking at OpenFGA, it doesn’t seem to be nightmarishly hard. I think I will do a one-node Kubernetes cluster, again with OpenEBS, reusing that same Flux config. But this time, the deployment will be done in a virtual machine, where I can snapshot the entire virtual machine, feed it the GPU, or the extra hard drive I have.

Nah. I think I’m just gonna deploy bare metal.

Disable RKE2 ingress-nginx:

/etc/rancher/rke2/config.yaml

disable:

# Yeah so apparently this was kind of important.

# - rke2-coredns

- rke2-ingress-nginx

And then enable servicelb, to allow for the ingress service to actually serve on a port on the host:

systemctl edit rke2-server.service

### Editing /etc/systemd/system/rke2-server.service.d/override.conf

### Anything between here and the comment below will become the new contents of the file

[Service]

ExecStart=

ExecStart=/usr/local/bin/rke2 server --enable-servicelb

Fix NetworkManager: https://docs.rke2.io/known_issues#networkmanager

And then RKE2 is set up.

Flux:

flux bootstrap git --url ssh://moonpie@moonpiedumpl.ing:22022/home/moonpie/flux-config --branch=main --private-key-file=/home/moonpie/.ssh/moonstack --verbose

cat age.agekey | kubectl create secret generic sops-age --namespace flux-system --from-file=age.agekey=/dev/stdin

But none of this works, because for whatever reason, the remote git repo on my server does not have any commits, even after I push the git repo.

git push origin origin/main

Can I change this to be the default? But my stuff deploys at least. Authentik is up again.

Coder

Coder is an open source platform for remote desktop environments. The thing they seem to be most excited about is the VS Code based environments, but I care the most about the terminal access, and the webvnc based environments.

Also, looking at their helm chart values, and their OIDC documentation it seems that environment variables are used to configure many aspects of coder.

So, I need to set up secrets again.

sops --encrypt --encrypted-regex '^(values.yaml)$' codersecrets.yaml

To get secret content after it is applied, for testing:

kubectl get secret secret -n namespace -o jsonpath="{.data.values\.yaml}" | base64 -d
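The `base64 -d` step is needed because the API returns the `data` field of a Secret base64-encoded, even when it was written through `stringData`. A small sketch of the same decoding in Python; `decode_secret_data` is a hypothetical helper and the sample content is a placeholder.

```python
import base64


def decode_secret_data(data):
    """Decode the base64 values found under a Secret's .data field."""
    return {key: base64.b64decode(value).decode("utf-8") for key, value in data.items()}


if __name__ == "__main__":
    # Stand-in for what the jsonpath query above would return.
    encoded = {"values.yaml": base64.b64encode(b"auth:\n  username: coder\n").decode()}
    print(decode_secret_data(encoded)["values.yaml"])
```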

One hiccup: I fear that I may need to set a list of valid email domains that it accepts as OIDC users, documented here.

But looking through the code, it looks like I can leave that config empty.

So here are my two secrets, that I am encrypting (without the sensitive data, of course):

apiVersion: v1

kind: Secret

metadata:

name: coder-pg-secrets

type: Opaque

stringData:

values.yaml: |

auth:

username: coder

password: "secretpassword"

database: coder

apiVersion: v1

kind: Secret

metadata:

name: coder-secrets

type: Opaque

stringData:

values.yaml: |

coder:

env:

- name: CODER_PG_CONNECTION_URL

value: "postgres://coder:secretpassword@coder-db-postgresql.coder.svc.cluster.local:5432/coder?sslmode=disable"

- name: CODER_OIDC_CLIENT_SECRET

value: "OIDC Secret value here"

sops --encrypt --encrypted-regex '^(values.yaml)$' --in-place filename.yaml

Whoops, this doesn’t deploy.

kubectl create namespace coder

It mostly worked, but I encountered an error:

Encountered an error running "coder server", see "coder server --help" for more information

error: access-url must include a scheme (e.g. 'http://' or 'https://)

So I had to change that variable.
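The error above can be caught before deploying with a quick check on the value. This is only a sketch of the idea, not Coder's own validation, and the hostname is a placeholder.

```python
from urllib.parse import urlparse


def has_http_scheme(url):
    """True if the URL starts with an explicit http or https scheme."""
    return urlparse(url).scheme in ("http", "https")


if __name__ == "__main__":
    print(has_http_scheme("coder.example.com"))          # bare hostname
    print(has_http_scheme("https://coder.example.com"))  # with scheme
```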

One thing I decided to do is store the terraform configuration files in my git repo. They won’t be applied automatically,

But eventually, I got it deployed. I even set up a workspace, using the starter “kubernetes” template.

It doesn’t come with noVNC, which I really want. I’m looking at adapting the code from the desktop container in combination with the Kali Linux webtop image to get a web based Kali Linux environment for CTFs, workshops, and similar events.

Okay, this is actually kinda difficult.

s6-overlay-suexec: fatal: can only run as pid 1

So the webtop docker containers work by launching a complex set of init scripts using s6, and if I try to start s6 via the /init script in a shell, then it complains about not being pid one. I might have to create a custom container to get around this, but I don’t want to do that.

Another thing I can try is creating a “docker mod”, an extension to Linuxserver’s docker containers that can be called via an environment variable. Usage example here

I could also try the enterprise-desktop image by Coder themselves, but I really do want that kasmvnc and kali image.

Although, it looks like I don’t have time, since I need an ephemeral desktop UI up now, for the lab. The coder templates repo has a “better vnc” image, which is a kasmvnc based image. But there is no prebuilt docker image for me to work with. I tried to replace the Dockerfile image with kasmweb’s docker image: docker.io/kasmweb/kali-rolling-desktop:1.17.0. But it didn’t work.

Instead, I built that image, and pushed it to docker hub.

I play around with the image and the terraform code, but when I try to deploy it, I get an error. It’s unclear, but I think the problem is that the kasmweb image I used is designed for docker, so when I try to deploy it on kubernetes, things break.

What about something else: With the Linuxserver images, what if I run a second command in the container. It looks like kubernetes can do this via container lifecycle hooks. I can also reuse these in the terraform.

This seemed interesting, and after some tinkering with security contexts (I think s6 init wants to start as root), I almost have it working but code-server has an error:

/tmp/code-server/bin/code-server: exec: line 28: /tmp/code-server/lib/code-server-4.8.3/lib/node: not found

Sometimes the persistent volume claim that Coder would create would fail to delete, because the container wasn’t getting deleted. To fix this, I had to shell into the container and then pkill coder, to destroy the zombie process.

But, after using the normal code-server install script, which installs a debian package into the system it works. But, after much tinkering, I’ve found that I don’t actually need code-server right now, so I got rid of it.

The important thing is the coder agent. This agent seems to not properly die, which somewhat makes sense, because I start it via a container lifecycle postStart hook instead of having it be the container’s actual command.

The problem is that even though the container dies properly if I exec in and kill the coder process (or PID 1), it doesn’t work if I have these commands as a lifecycle hook.

I also discovered that “coder.agent.main.startup_script” and “coder.agent.main.init_script” are different. The init script is what downloads and starts the actual coder agent, whereas the startup script is just auxiliary stuff you want to run, like downloading and starting code-server.

So I need a way to start the init script such that it detaches and disowns itself, and then the kubernetes container considers itself as running. After some tinkering, I got this:

command = ["screen", "-dmS", "coder_screen", "sh", "-c", coder_agent.main.init_script]

Because the container comes with screen already, I can do this, and reuse an existing container for coder with minimal modifications or changes to the terraform overall (other options I was looking into were things like using a volume mount to add more s6 services, but it is difficult because the init_script is autogenerated, so I don’t actually know what it is, and I also still do not know if it is a shell script or just a sequence of commands set up by EOF).

In the future, when I have more users, another thing I want to do is apply NetworkPolicies depending on the groups a user is in, to control which networks the workspace has access to.

I have made a Github Gist with my code.

Next up is to inject the environment variables via secrets.

It took some tinkering but I got it working in the repo. Apparently, the postgres process doesn’t actually need the secret url I gave it, so I got rid of it.

I have now migrated to cloudnativepg.

But after this, I want to migrate away from my little injection hack to something more stable. I should be able to create a configmap, and then mount that into the container. I started working on this, and it looks like the command is not truly templated, but instead that many of the things like the api key are generated from environment variables.

Okay, the next thing I want to do for coder is to use a CUDA image for Leela chess 0, so I and my friends can take advantage of the power analysis capabilities of nibbler on the go. There are a few ways I can do this.

I think the easiest way to do this is to use a webtop podman image on the host, and then install all of the relevant GPU drivers and the cuDNN build of lc0 from the AUR. Then, I can commit the image and push it.

abc@43d4afccc294:/var/cache$ history

1 sudo chown abc:abc config/

2 ls

3 cd config/

4 ls

5 sudo pacman -Sy git

6 git clone https://aur.archlinux.org/packages/lc0-cudnn-git.git

7 git clone https://aur.archlinux.org/packages/lc0-cudnn-git

8 git clone https://aur.archlinux.org/lc0-cudnn-git.git

9 cd lc0-cudnn-git/

10 ls

11 makepkg -si

12 lc0

13 cd ..

14 ls

15 git clone https://aur.archlinux.org/packages/nibbler-git

16 git clone https://aur.archlinux.org/packages/nibbler-gi.git

17 git clone https://aur.archlinux.org/nibbler-git.git

18 cd nibbler-git/

19 ls

20 makepkg -si

21 lco --help

22 sudo pacman -Scc

This mostly works, except the lc0 backend wants a network file. I can probably put in a volume, where it has the

My concern, however, is image and model size. The image is currently very large, and I fear that loading CUDA into RAM for more than one container may not deduplicate the memory the way non-GPU operations do.

Same goes for lc0’s model file.

I load it up in a podman container to test:

Latest version of CUDA supported by the driver: 12.4.0

WARNING: code was compiled with unsupported CUDA version.

CUDA error: CUDA driver version is insufficient for CUDA runtime version (../../src/neural/backends/cuda/network_cuda.cc:233)

error CUDA error: CUDA driver version is insufficient for CUDA runtime version (../../src/neural/backends/cuda/network_cuda.cc:233)

I was using an Arch Linux container, and a Debian host.

I updated the NVIDIA driver (and CUDA) on the host. Also, apparently the CUDA libraries are mounted into the container, so I don’t think I need CUDA in the image, although I do probably need cuDNN.
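The error boils down to a version comparison: the runtime the binary was built against is newer than the newest CUDA version the host driver supports. A simplified sketch of that rule follows; it ignores NVIDIA's minor-version and forward-compatibility mechanisms, and the runtime version 13 is an assumption taken from the `cuda=13` in the pacman command below.

```python
def parse_version(text):
    """Turn '12.4.0' into (12, 4, 0) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def driver_supports_runtime(driver_max_cuda, runtime_cuda):
    """True if the runtime is not newer than what the driver supports."""
    return parse_version(runtime_cuda) <= parse_version(driver_max_cuda)


if __name__ == "__main__":
    # Driver reports 12.4.0; the Arch container builds against a newer CUDA.
    print(driver_supports_runtime("12.4.0", "13"))
    print(driver_supports_runtime("12.4.0", "12.2.1"))
```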

podman run -it --rm -v ".:/config/lc0" --gpus all --entrypoint bash --user abc docker.io/linuxserver/webtop:arch-kde

makepkg -s

pacman -S ./ --assume-installed cuda=13

Oauth2

Another thing is that Oauth2/OIDC was not working. It seems that my method of secrets did not work, so instead I have to do something else, “envfrom”.

https://artifacthub.io/packages/helm/coder-v2/coder

https://github.com/coder/coder/blob/main/helm/coder/values.yaml#L31

In addition to this, I also set CODER_PG_CONNECTION_URL. It seems that I wasn’t setting that, which caused me to lose all user data upon upgrades.

But after this, I attempted to get OIDC role sync working. But it doesn’t work, as it seems that it’s a premium feature. And in addition to that, I cannot control what permissions OIDC users have from the UI, so I have to authenticate as the admin user from the command line, and then I can use coder user edit-roles moonpiedumplings

Authentik

It’s deployed (see previous project), but I am going to record my steps here. Firstly, it looks like I should configure from the “Application” page, and use “Create with Provider” in order to create the whole set of necessary things.

There were some interesting hiccups though. The “Issuer URL” for Coder needs a trailing slash, since it seems to use strict string matching.

error: create oidc config: configure oidc provider: oidc: issuer did not match the issuer returned by provider, expected "https://sso.moonpiedumpl.ing/application/o/coder" got "https://sso.moonpiedumpl.ing/application/o/coder/"

Also, for Incus, it looks like I need to enable device code, otherwise it won’t work.
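The issuer mismatch in the Coder error above is a plain string comparison, so one missing character is enough to fail it. A tiny sketch of why, using hypothetical helper names:

```python
def issuer_matches(configured, advertised):
    """Strict comparison, the behaviour the error message suggests."""
    return configured == advertised


def with_trailing_slash(url):
    """Append a slash only if the URL does not already end with one."""
    return url if url.endswith("/") else url + "/"


if __name__ == "__main__":
    configured = "https://sso.moonpiedumpl.ing/application/o/coder"
    advertised = "https://sso.moonpiedumpl.ing/application/o/coder/"
    print(issuer_matches(configured, advertised))
    print(issuer_matches(with_trailing_slash(configured), advertised))
```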

I’m now stuck for Incus, because it seems that single sign on only works if the services are on the same domain/subdomain. Incus is on a different domain, so it fails. I need to proxy a service external to Kubernetes, to something inside Kubernetes.

This is actually more difficult than I thought it would be.

---

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: incus

annotations:

cert-manager.io/cluster-issuer: "letsencrypt-prod"

acme.cert-manager.io/http01-edit-in-place: "true"

nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"

namespace: openfga

spec:

rules:

- host: incus.moonpiedumpl.ing

http:

paths:

- pathType: Prefix

path: "/"

backend:

service:

name: incus-external-svc

port:

number: 8443

tls:

- hosts:

- "incus.moonpiedumpl.ing"

secretName: incus-tls

---

apiVersion: v1

kind: Service

metadata:

name: incus-external-svc

namespace: openfga

spec:

ports:

- protocol: TCP

port: 8443

targetPort: 8443

---

apiVersion: v1

kind: Endpoints

metadata:

name: incus-external-svc

namespace: openfga

subsets:

- addresses:

- ip: 130.166.79.34

ports:

- port: 8443

So this works, but it doesn’t get an SSL certificate. It just uses the default kubernetes test certificate, rather than a real one. To fix this, you have to remove these two annotations:

acme.cert-manager.io/http01-edit-in-place: "true"

nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"

and then re-add them once the certificate has been issued. But don’t Let’s Encrypt certificates only last 90 days? It works for now, however.

Next thing is, I want to automatically create projects in Incus when users are invited, so people can have a private (publicly hosted) VPS for whatever they want.

Right now, the most reasonable way to do this is to do a “stage” with an “expression policy”, which can execute arbitrary Python code, and then I can make an API call with the Python requests library (using my private admin certificate).

Here are the Incus API docs on how to add a project. Here are the docs on how to bind an expression policy to a stage.

Okay apparently requests needs to read a certificate file in order to do TLS auth, and I don’t have one. I can probably get around this by creating a tempfile or something of the sort.
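A minimal sketch of the tempfile workaround, assuming the certificate and key are available as PEM strings inside the policy. `write_pem_tempfile` is a hypothetical helper. In requests, the client certificate goes in the `cert=` argument (a path, or a `(cert, key)` tuple of paths), while `verify=` is for the CA bundle used to check the server.

```python
import os
import tempfile


def write_pem_tempfile(pem_text, suffix=".pem"):
    """Write PEM text to a private temporary file and return its path.

    The caller is responsible for deleting the file afterwards.
    """
    fd, path = tempfile.mkstemp(suffix=suffix)
    with os.fdopen(fd, "w") as handle:
        handle.write(pem_text)
    return path


if __name__ == "__main__":
    cert_path = write_pem_tempfile("-----BEGIN CERTIFICATE-----\n(placeholder)\n")
    key_path = write_pem_tempfile("-----BEGIN PRIVATE KEY-----\n(placeholder)\n")
    try:
        # These paths could then be passed as cert=(cert_path, key_path).
        print(cert_path, key_path)
    finally:
        os.remove(cert_path)
        os.remove(key_path)
```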

I may also need to make a python request to openfga… hmmm. I think this might be too complex. Firstly, let’s figure out what actually needs to be done:

- User does invite

- Create incus project named after user (via an API call that uses a TLS certificate to authenticate)

- Add user to created project in openfga. I think this is giving a user either the operator or user relation to a project.

According to one of the test suites, it looks like the “user” of a project can interact with instances, but not edit them, whereas an operator has control over project level resources (but maybe is restricted by project restrictions.)

import tempfile

import json

ca_cert = ""

# Compressed backups?

incusdata = {

"config": {

"features.networks": "false",

"features.profiles": "true",

"restricted": {

"backups": "allow",

"networks.access": "public0, incus0"

}

},

"description": "Personal project for you",

"name": request.user.username

}

# Write the certificate to a temporary file since this will be ran inside authentik

with tempfile.NamedTemporaryFile(mode='w', delete=False) as tmp:

tmp.write(ca_cert)

tmp.flush()

response = requests.post('https://incus.moonpiedumpl.ing', json=incusdata, verify=tmp.name)

openfga_api_key = ""

headers = {

"Authorization": f"Bearer {openfga_api_key}",

"content-type": "application/json"

}

openfgadata = {

"authorization_model_id": "stuff",

"tuple_key": {

"user": f"user:{request.user.username}",

"relation": "member",

"object": "layer8"

}

}

openfgaresponse = requests.post('https://openfga.moonpiedumpl.ing/stores/$FGA_STORE_ID/check', json=openfgadata, headers=headers)

return True

I will have to collect some information: the authorization model ID and the store ID.

Okay, I won’t sweat a custom project yet. But I will automatically add invited users to the layer8 group.

Creating an invite was pretty painful. There appear to be required steps that are not documented on the invitation documentation page: you also have to bind an “invitation” stage as the first stage in the actual invite flow. Otherwise things don’t work.

But after some tinkering, and giving up on an expression policy that affects both Incus and openfga, and focusing on openfga instead, I got something that works:

import json

openfga_api_key = "secretkey"

headers = {
    "Authorization": f"Bearer {openfga_api_key}",
    "content-type": "application/json"
}

data = {
    "writes": {
        "tuple_keys": [
            {
                "user": f"user:{request.user.username}",
                "relation": "member",
                "object": "group:layer8"
            }
        ]
    },
    "authorization_model_id": "01JWHN0RS0QTJ4JJPJ659XCSPG"
}

openfgaresponse = requests.post('https://openfga.moonpiedumpl.ing/stores/01JWHMZPF85VE8DWYHKV90QTCT/write', data=json.dumps(data), headers=headers)
print(openfgaresponse)

return True

With this, users can sign up, and then they automatically get added as a “member” to the openfga object. Then they can log in via SSO to incus, and they have access to the layer8 project.

People have complimented me, saying that this setup is much, much easier to use than the previous setup, in which people had to import certificates.

But now it is time to update it. I think I will change it so that it happens not just on invites, but on logins, and it automatically adds and removes users.

So something like this:

import json
from authentik.core.models import Group

system_groups = ["authentik Read-only", "authentik Admins"]
all_groups = [group.name for group in Group.objects.all()]
all_groups = list(set(all_groups) - set(system_groups))
user_groups = [group.name for group in request.user.all_groups()]

openfga_api_key = "secretkey"

writes = {
    "writes": {
        "tuple_keys": [
            {
                "user": f"user:{request.user.username}",
                "relation": "member",
                "object": "group"
            }
        ]
    },
    "authorization_model_id": "01K70P5HAQ8K2J3AN978F7Y1EC"
}

deletes = {
    "deletes": {
        "tuple_keys": [
            {
                "user": f"user:{request.user.username}",
                "relation": "member",
                "object": "group"
            }
        ]
    },
    "authorization_model_id": "01K70P5HAQ8K2J3AN978F7Y1EC"
}

headers = {
    "Authorization": f"Bearer {openfga_api_key}",
    "content-type": "application/json"
}

# Remove the user from every group they are not in
for group in list(set(all_groups) - set(user_groups)):
    deletes["deletes"]["tuple_keys"][0]["object"] = f"group:{group}"
    requests.post('https://openfga.moonpiedumpl.ing/stores/01K70P3ZX9DJEHF85XDJS4PXN2/write', data=json.dumps(deletes), headers=headers)

# Add the user to every group they are in
for group in user_groups:
    writes["writes"]["tuple_keys"][0]["object"] = f"group:{group}"
    requests.post('https://openfga.moonpiedumpl.ing/stores/01K70P3ZX9DJEHF85XDJS4PXN2/write', data=json.dumps(writes), headers=headers)

return True

And this works beautifully. I assign this expression policy to the Incus application, and now a user’s groups are automatically synchronized when they log in. And then I do:

fga tuple write --store-id $FGA_STORE_ID group:admins#member admin server:incus — this gives the admins authentik group admin access to the server.

And then

fga tuple write --store-id $FGA_STORE_ID group:layer8#member operator project:layer8

And so on for each project I want a group to have access to.
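The synchronization logic in the policy above can be pulled out into a plain function, which makes it easier to test outside authentik. This is a minimal sketch, not the policy itself: `build_sync_payloads` is a hypothetical helper name, the group names and model id are placeholders, and it keeps one tuple per request body (as the policy does) on the assumption that OpenFGA rejects a whole write request if any single tuple in it is rejected.

```python
def build_sync_payloads(username, all_groups, user_groups, model_id):
    """Return (writes, deletes): one OpenFGA write request body per group."""
    def tuple_key(group):
        return {
            "user": f"user:{username}",
            "relation": "member",
            "object": f"group:{group}",
        }

    member_of = set(user_groups)
    # Groups the user belongs to get a "member" tuple written.
    writes = [
        {"writes": {"tuple_keys": [tuple_key(g)]}, "authorization_model_id": model_id}
        for g in sorted(member_of)
    ]
    # Every other known group gets the corresponding tuple deleted.
    deletes = [
        {"deletes": {"tuple_keys": [tuple_key(g)]}, "authorization_model_id": model_id}
        for g in sorted(set(all_groups) - member_of)
    ]
    return writes, deletes


# Hypothetical example values; "MODEL_ID" is a placeholder.
writes, deletes = build_sync_payloads(
    "testuser", ["layer8", "admins", "ctf"], ["layer8"], "MODEL_ID"
)
```

Each element of `writes` and `deletes` could then be posted to the store's write endpoint the same way the policy does.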

In the future, I am thinking I could use this for CTF’s and the like, where I have a group with read only access to an Incus project and then I have multiple projects for a PvP ctf.

I also created a custom policy to enforce ascii usernames:

username = request.context.get("prompt_data").get("username")

# str.isalnum() alone also accepts non-ASCII letters and digits, so check isascii() too
if not (username.isascii() and username.isalnum()):
    ak_message("Only numbers and letters in usernames allowed")
    return False
elif len(username) > 16:
    ak_message("Username too long, 16 character max")
    return False
else:
    return True

Postgres update

So my authentik goes down, because I need to manually backup and restore the postgres database in order for an update to happen.

https://version-2023-10.goauthentik.io/docs/troubleshooting/postgres/upgrade_kubernetes

Except, since postgres updated automatically, it was crashing because it couldn’t launch with older database files. First, I edited the pod and downgraded postgres.

But I got an ImagePullBackOff error, so I listed all images running in the cluster, and then selected the postgres image that was already there.

I have to get the secret myself:

cat /opt/bitnami/postgresql/secrets/postgres-password

It still says password authentication failed, but it does dump the file out.

I end with mv data data-15.

[moonpie@osiris ~]$ flux resume source git flux-system

[moonpie@osiris ~]$ flux reconcile helmrelease -n authentik authentik

But then I still can’t do things because I’m not root.

resources: {}
securityContext:
  allowPrivilegeEscalation: true
  capabilities:
    drop:
    - ALL
  privileged: false
  readOnlyRootFilesystem: true
  # runAsGroup: 1001
  runAsNonRoot: false
  # runAsUser: 1001

So I edit the pod with that. Doesn’t work, apparently only a few things can be changed.

I follow this stackoverflow answer, and come up with this:

root@thoth:/var/lib/rancher/rke2/bin# ./runc --root /run/containerd/runc/k8s.io/ exec -t -u 0 f2aedf564b6f43fc895fc84aeba2e7e7a02b4f59ea835de13b76bcade462adca bash

I’m root indeed, but I still am struggling to connect to the postgres server, and it is asking for a password. Actually, I can give it one — but my password contains special characters, making it difficult to enter manually. I can change the password, and then.

I encode special characters in the url, but it doesn’t work:

I have no name!@authentik-postgresql-0:/bitnami/postgresql$ psql 'postgresql://postgres:secretpassword/@localhost/'

psql: error: local user with ID 1001 does not exist
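For reference, percent-encoding a password for a connection URI can be done with Python's standard library. This is a minimal sketch with a made-up password, and `postgres_uri` is a hypothetical helper. It only covers the encoding step, and would not by itself address the `local user with ID 1001 does not exist` error above.

```python
from urllib.parse import quote


def postgres_uri(user, password, host, database=""):
    """Build a postgresql:// URI with the user and password percent-encoded."""
    # safe="" makes quote() also encode "/" which it leaves alone by default
    return (
        f"postgresql://{quote(user, safe='')}:{quote(password, safe='')}"
        f"@{host}/{database}"
    )


# Made-up password containing characters that are special in a URI
uri = postgres_uri("postgres", "p@ss/w:rd", "localhost")
print(uri)
```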

I could nuke authentik and reset it, but instead, I think I am going to change the secret authentik uses for the password, while downgrading postgres at the same time.

So firstly I need to edit the sops file:

export SOPS_AGE_KEY_FILE=~/vscode/flux-config/age.agekey

sops edit --ignore-mac secrets.yaml

--ignore-mac is needed because sops thinks the file has been tampered with.

But even after editing the file, it still asks for a password, and it doesn’t work.

/opt

I have no name!@authentik-postgresql-0:/opt/bitnami/postgresql/conf$ cat pg_hba.conf

host all all 0.0.0.0/0 md5

host all all ::/0 md5

local all all md5

host all all 127.0.0.1/32 md5

host all all ::1/128 md5

The container doesn’t come with an editor. :/

Trying something else: there is a docker container, pgautoupgrade, that automatically upgrades a database.

Firstly, I located the persistent volume in “/var/openebs” on the system. Then I ran the command:

root@thoth:/var/openebs/persistent/pvc-513c6021-afbb-410c-9c86-60fcf9d6089c# podman run -it -v "./data:/var/lib/postgresql/data" -e POSTGRES_PASSWORD=secretpassword -e PGAUTO_ONESHOT=yes docker.io/pgautoupgrade/pgautoupgrade:17-bookworm

The update works!

But authentik still fails to access postgres via the password. The logs for both simply say that password authentication failed.

I also try some debug pods:

kubectl debug -n authentik authentik-server-5dcc9c45f5-thllc -it --image=busybox --target=server

kubectl debug -n authentik authentik-postgresql-0 -it --image=busybox --target=postgresql

Interesting and useful feature, but not helpful at the moment. :(

The Bitnami postgresql chart, used here, seems to have something to automatically update to the new passwords, but it requires the old passwords.

mv pg_hba.conf pg_hba.conf.bac

echo "local all all trust" > pg_hba.conf

(No text editor, and these config files don’t seem to be mounted.)

/opt/bitnami/postgresql/bin/pg_ctl reload

Then I can get in. Now I have to change the password manually.

psql -U postgres

And then:

postgres=# ALTER USER authentik WITH PASSWORD 'secretpassword';

ALTER ROLE

postgres=# ALTER USER postgres WITH PASSWORD 'secretpassword';

ALTER ROLE

postgres=#

Then I put the original pg_hba.conf back, and run pg_ctl reload.

And it works!

Flows and Stages

Just some nice to have things that don’t seem to be there by default:

- Invitations

- Passwords on the same sign in page

- Recovery (password change flow)

I managed to get the single-page sign in pretty simply, by selecting a “password stage” in the options for the default-authentication-flow. After that, users no longer need to click twice; they can sign in from a single page.

Next up is password recovery. When I try to click on “Create recovery link” so I can create recovery links for users, I get an error “Recovery flow not applicable to user”.

Openfga

Okay, I deployed it using the helm chart. I’m looking through the Incus docs. So it looks like Incus does the initial setup.

Then, once I do that, I need to call the API to update relations.

Also, I need to add a nodeport service so that Incus can access the server. After scrolling through a very interesting security audit:

The likelihood is rated low as OpenFGA is designed to be run within an intranet environment and not exposed to public internet.

And this is somewhat difficult with my setup. I deployed it on Kubernetes, with no firewall, and now I want to expose the service — but only to localhost.

Actually that also doesn’t work. It looks like coder instances are placed on the same ip address as my host machine, meaning they would have access to all network stuff that localhost would.

No, looking further, it does look like openfga does use authentication, and the reason why an intranet is recommended is in case of ddos attacks.

Here is my secret:

apiVersion: v1
kind: Secret
metadata:
  name: mysecret
type: Opaque
stringData:
  values.yaml: |
    postgresql:
      auth:
        postgresPassword: secretpassword
    datastore:
      uri: "postgres://postgres:secretpassword@openfga-postgresql.default.svc.cluster.local:5432/postgres?sslmode=disable"
    authn:
      preshared:
        keys: ["secretkeyhere"]

Now that I’ve deployed it, I need to configure it.

Firstly, to get my preshared key:

kubectl get secrets -n openfga openfga-secrets -o jsonpath='{.data.values\.yaml}' | base64 -d

(I actually managed to remember this without looking at my notes. I don’t know if I should be proud or scared).

nix-shell -p openfga-cli, or other methods of installing the openfga cli.

export FGA_API_URL=https://openfga.moonpiedumpl.ing

export FGA_API_TOKEN=secretkeyhere

fga create store incus — only needs to be done once.

[nix-shell:~/vscode/moonpiedumplings.github.io]$ fga store list

{

"continuation_token":"",

"stores": [

{

"created_at":"2025-05-30T22:28:45.160445043Z",

"id":"01JWHMZPF85VE8DWYHKV90QTCT",

"name":"incus",

"updated_at":"2025-05-30T22:28:45.160445043Z"

}

]

}

export FGA_STORE_ID=01JWHMZPF85VE8DWYHKV90QTCT

export FGA_MODEL_ID=01JWHN0RS0QTJ4JJPJ659XCSPG

And then, I fill out the incus configuration values: openfga.api.token, openfga.api.url, and openfga.store.id with the appropriate values.

After this, running this command: fga model get --store-id=$FGA_STORE_ID outputs the incus openfga model.

[nix-shell:~/vscode/moonpiedumplings.github.io]$ fga tuple write --store-id=$FGA_STORE_ID group:layer8#member operator project:layer8

{

"successful": [

{

"object":"project:layer8",

"relation":"operator",

"user":"group:layer8#member"

}

]

}

[nix-shell:~/vscode/moonpiedumplings.github.io]$ fga query check --store-id=$FGA_STORE_ID --model-id=$FGA_MODEL_ID group:layer8#member operator project:layer8

{

"allowed":true,

"resolution":""

}

Neat!

But this doesn’t actually work. I attempt to sign in with single sign on, and it takes me back to the Incus logged out page, and the only information is a vague error: Uncaught (in promise) TypeError: NetworkError when attempting to fetch resource.

I get a little more information when I authenticate with the command line:

[nix-shell:~]$ incus remote add main https://incus.moonpiedumpl.ing --verbose --debug --auth-type=oidc

DEBUG [2025-06-02T23:19:06-07:00] Connecting to a remote Incus over HTTPS url="https://incus.moonpiedumpl.ing:8443"

DEBUG [2025-06-02T23:19:06-07:00] Sending request to Incus etag= method=GET url="https://incus.moonpiedumpl.ing:8443/1.0"

Certificate fingerprint: b78c9aa9b22398a2255e93e4ba6a2e7f431c3f2e55503adb59a32e57f0471292

ok (y/n/[fingerprint])? y

DEBUG [2025-06-02T23:19:07-07:00] Connecting to a remote Incus over HTTPS url="https://incus.moonpiedumpl.ing:8443"

DEBUG [2025-06-02T23:19:07-07:00] Sending request to Incus etag= method=GET url="https://incus.moonpiedumpl.ing:8443/1.0"

URL: https://sso.moonpiedumpl.ing/device?code=828932864

Code: 828932864

Error: not authorized

This is also vague, but something interesting shows up in the journald logs:

Jun 02 23:15:34 thoth incusd[1286]: time="2025-06-02T23:15:34-07:00" level=warning msg="Rejecting request from untrusted client" ip="130.166.192.112:12998"

I found the error in the source code, but I don’t quite understand what’s going on.

After finding some discussion posts, I changed the oidc claim from preferred_username to email, and it works — kinda. It only works if I login and launch the web ui via the incus command line client (website still gets that error), and when I do so, I don’t have access to anything at all.

Also, incus seems to have an error in the logs, related to openfga:

Jun 09 17:33:15 thoth incusd[426476]: time="2025-06-09T17:33:15-07:00" level=error msg="Failed background OpenFGA resource sync" err="Failed to write tuple to OpenFGA store (user: \"server:incus\"; relation: \"server\"; object: \"certificate:025a3516ca2c622a93446548954e33d75eafa3e8173d0d6a435fc27d4072932e\"): Write validation error for POST Write with body {\"code\":\"validation_error\",\"message\":\"invalid TupleKey.Object: value does not match regex pattern \\\"^[^\\\\\\\\s]{2,256}$\\\"\"}\n with error code validation_error error message: invalid TupleKey.Object: value does not match regex pattern \"^[^\\\\s]{2,256}$\""

fga tuple write --store-id=$FGA_STORE_ID group:layer8#member user server:incus

Still no dice. What about the admin role?

fga tuple write --store-id=$FGA_STORE_ID group:layer8#member admin server:incus

I also tried:

fga tuple write --store-id=$FGA_STORE_ID user:testuser admin server:incus, and variants including test@gmail.com (the email address of my test user).

Still doesn’t work, but I did learn something. It seems that openfga is not reading groups from ldap/oidc to figure out what groups users are in, but instead it has its own groups, which I have to add users to as a relation.
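To make that concrete: group membership lives in OpenFGA as tuples, and a permission granted to `group:layer8#member` applies to any user that has a `member` tuple on that group. The toy resolver below only illustrates that idea with a single level of indirection. It is not how OpenFGA evaluates checks internally, and `check` is a hypothetical function.

```python
# Relationship tuples as (user, relation, object), in the same notation the fga CLI uses
tuples = {
    ("user:testuser", "member", "group:layer8"),
    ("group:layer8#member", "operator", "project:layer8"),
}


def check(user, relation, obj, tuples):
    """Toy check: a direct tuple, or one hop through a `type:id#relation` userset."""
    if (user, relation, obj) in tuples:
        return True
    for subject, rel, target in tuples:
        if rel == relation and target == obj and "#" in subject:
            group, group_relation = subject.split("#", 1)
            # The user qualifies if they hold `group_relation` on the group
            if (user, group_relation, group) in tuples:
                return True
    return False


allowed = check("user:testuser", "operator", "project:layer8", tuples)
denied = check("user:someoneelse", "operator", "project:layer8", tuples)
```

Without the first tuple, the second one grants nothing to `user:testuser`, regardless of which groups the identity provider reports.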

Also, I switched the oidc claim back to preferred_username, instead of email, and it does show up as a different user:username in the openfga logs, instead of user:email.

Wait, that’s not true: If the user has server:admin permissions, then they can change server settings, even though they can’t see any instances or projects.

And if I give the user the operator relation to the layer8 project, then it can see only two of the 9 existing virtual machines :(

I have two theories:

- This is something to do with accessing certain networks, specifically the public0 network I have, and the default disk pool. These aren’t considered under the “layer8” project, so they aren’t able to be accessed by the machine. It could also be the images, which are considered under project “default”.

- The Openfga relations are broken and need to be rebuilt.

Something else: Why am I trying to figure it out? It seems like sso users can access, view, create, and delete stuff, just not everything that is already there.

[nix-shell:~/vscode/moonpiedumplings.github.io]$ fga tuple write --store-id=$FGA_STORE_ID project:layer8 project profile:layer8/default

This enables my test user to see the layer8 default profile, but I really think this is the kind of relationship that should have been automatically added. And upon further searching, it seems that the two instances I care about, already are explicitly under the layer8 certificate.

~/vscode/moonpiedumplings.github.io> fga tuple write --store-id=01JWHMZPF85VE8DWYHKV90QTCT project:layer8 project instance:layer8/*

~/vscode/moonpiedumplings.github.io> fga tuple write --store-id=01JWHMZPF85VE8DWYHKV90QTCT project:layer8 project instance:layer8/Kal-Test

Sadly, the former doesn’t work, but the latter does. Also, it seems that when I move instances from one project to another, the openfga relations are created, allowing me to access stuff.

incus move --target-project default --project layer8 Kal-Test (the Instance must be stopped).

After creating a new storage pool, I see the relation that is missing:

"key": {

"object":"storage_pool:testpool",

"relation":"server",

"user":"server:incus"

},

"timestamp":"2025-06-16T23:29:34.933052608Z"

Adding this relation to the other storage pools, and then it works.

Next up is networks, which do not load.

I tried:

[nix-shell:~]$ fga tuple write --store-id=01K001MQRKQ1BH74FZP9H1V89X server:incus server project:layer8

This doesn’t work, but is probably needed anyways so I am keeping it here.

[nix-shell:~]$ fga tuple write --store-id=01K001MQRKQ1BH74FZP9H1V89X project:layer8 project network:default/internal0

Now it works, and people with access to the layer8 network can access this subnet. Although this kind of control seems to be redundant with the way that projects have a built in way to restrict what networks they use.

Finally, I also need to control storage pools.

{

"key": {

"object":"storage_volume:layer8/layer8/virtual-machine/vmname",

"relation":"project",

"user":"project:layer8"

},

"timestamp":"2025-07-12T19:27:48.422892604Z"

},

But this one was automatically added. Maybe Incus automatically syncs resources, and I don’t need to do it manually, I just need to wait?

Oh, and another issue I had is that after I rebooted my machine, all my openfga relations were lost. I forgot to deploy openfga connected to postgres, so no actual data was being stored persistently.

I also made the same mistake with coder earlier. In the future, I will delete my helmreleases and then redeploy them to ensure that the data is actually persistent.

I nuked the setup and attempted to set it up again, but I ran into an issue. I tried some of the fixes in that thread, but they did not work (probably because ArgoCD is different from Flux).

What worked was a thread about flux, where essentially the problem is that flux waits for pods to be created before running post install jobs. But sometimes a release includes a pod that does nothing but wait for those jobs to complete, so waiting for the pods to be fully created must be disabled.

Drive

I am debating between Nextcloud, and other options.

Nextcloud:

- Popular

- But it’s in PHP, with an older architecture

- Extremely extendable, with many extensions/apps in their “store”

Opencloud

Forgejo

Firstly, here are relevant links:

https://artifacthub.io/packages/helm/forgejo-helm/forgejo

https://code.forgejo.org/forgejo-helm/forgejo-helm/src/branch/main/values.yaml — These are the actual values

https://forgejo.org/docs/latest/admin/config-cheat-sheet/ (this is the forgejo config cheat sheet; all values in here can be set in the helm chart)

So what needs to be done is mainly the oauth/oidc login.

- Disable built in accounts (sign up, and log in)

- Oauth login

Another thing I want to do is deploy anubis to protect from scrapers. Due to the way that git forges seem to dynamically generate content, when scrapers attempt to scrape forges, there is a massive CPU load. As a temporary workaround, I’m going to block viewing except for signed in users, but anubis will be the longer term solution.

To start, it seems like forgejo uses an existingSecret syntax to be able to easily add secret data to the helm configuration.

Another thing I want to deploy is forgejo’s built in actions. However, according to the docs, it seems like actions are not run in a virtual machine, like Github actions are. Instead they are run in docker/podman/lxc containers, which is much, much weaker isolation from the host overall.

After some thought, I think the best way to do this is to not deploy a runner initially, but instead to deploy a virtual machine on Incus and connect it later on. The theoretically best way to do this, I think, is to set up the docker-in-docker image to use the kata-containers runtime instead of normal docker, so the docker jobs are actually being run in VM’s. I’m not really interested in a complex setup though, so I’ll just get it up first.

Right now I’ll just get forgejo up, but Incus provides Nixos images, and the forgejo runner has a nixos config.

Things were mostly going smoothly, until I was playing with oauth. I think that forgejo supports three different things that get conflated when I try to search for them:

- Acting as an Oauth2 client

- Logins via OpenID

- Acting as an Oauth2 provider

I am going through the forgejo docs right now (the website doesn’t support search so I cloned the git repo), and it appears that being an Oauth2 client is not really documented. OpenID is barely documented beyond a brief mention; it’s only the Oauth2 provider that is extensively mentioned.

But I know that forgejo supports more Oauth configuration options beyond what the helm chart mentions, like in this example.

I suspect this is because setting up an Oauth2 provider via the UI is trivial and self explanatory. But I am not using the UI, so I found the relevant source code, and it explains the options.

The issue is the way I do secrets. I was copying the existingSecret syntax from the bitnami subcharts, but that does not work. Forgejo has two ways to do secrets. I dislike both of them, because I am trying to make as much of my infra public as possible, except for the keys themselves. But with redis, I am forced to have the host option, which includes a password, be encrypted in its entirety.

Well, I could use variables, but those also add complexity. After some pondering, I think the best way to do it is to just have commented out versions that do not include the sensitive data.

So I map out all the options, fix some minor issues, and I think the forgejo pod fails to deploy because I have “hostport” set, which doesn’t work if forgejo is doing a rootless deployment.

To fix:

security:
  containerSecurityContext:
    sysctls:
    - name: net.ipv4.ip_unprivileged_port_start
      value: "0"

This doesn’t work, because apparently it only affects the pod’s network namespace, and not the host.

Another way to do this is by using a nodeport or loadbalancer service, but the reason why I am not a fan of those is that due to klipper-lb, all connections to ssh that way would appear to have the ip address of my server machine, rather than the ip address of the user.

I guess the best way to do this is to expose extra ports using ingress-nginx, and then use ingress-nginx to expose a tcp service. One problem is that the method to expose tcp services is specific to ingress-nginx, and not general.

init-app-ini An app.ini file already exists. To prevent overwriting secret keys, these settings are dropped and remain unchanged:

init-app-ini - security.INTERNAL_TOKEN

init-app-ini - security.SECRET_KEY

init-app-ini - oauth2.JWT_SECRET

init-app-ini - server.LFS_JWT_SECRET

Are these settings important enough for me to set manually? According to the docs they are.

Also, I think that the configuration settings are not changed to the default if you unset them in the helm settings, they stay what they are. So I must explicitly change them.

2025/07/25 06:52:18 ...c/net/http/server.go:2294:ServeHTTP() [I] PING DATABASE postgres

2025/07/25 06:52:18 ...eb/routing/logger.go:102:func1() [I] router: completed GET /api/healthz for 130.166.79.34:49228, 200 OK in 0.8ms @ healthcheck/check.go:67(healthcheck.Check)

2025/07/25 06:52:20 ...dules/auth/common.go:18:UnmarshalGroupTeamMapping() [E] Failed to unmarshal group team mapping: ReadMapCB: expect { or n, but found m, error found in #1 byte of ...|map[layer8:|..., bigger context ...|map[layer8:map[csun:[layer8]]]|...

2025/07/25 06:52:20 .../auth/linkaccount.go:294:LinkAccountPostRegister() [E] SyncGroupsToTeams: ReadMapCB: expect { or n, but found m, error found in #1 byte of ...|map[layer8:|..., bigger context ...|map[layer8:map[csun:[layer8]]]|...

This error happened because the oauth “group mappings” were wrong. I quote them and it works.
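The log hints at what went wrong: the parser expected JSON (`{`, or `n` for `null`) and instead received `map[layer8:...`, which looks like a map printed as text rather than JSON. A minimal sketch of producing the JSON string form of the same mapping, assuming the structure is group claim, then organization, then list of teams:

```python
import json

# Mapping recovered from the log line: group "layer8" -> org "csun" -> team "layer8"
group_team_map = {"layer8": {"csun": ["layer8"]}}

# Serialize to a JSON string, so the setting can be given as a quoted string
group_team_map_json = json.dumps(group_team_map)
print(group_team_map_json)
```

Giving the setting as a quoted string like this keeps it from being interpreted as a nested structure before it reaches the JSON parser.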

But the group mappings are not actually applied. When I configure authentik to restrict logins to anybody who has a groups claim in their scope, my test user cannot log in at all. Either Forgejo is not collecting the groups claim, or authentik is not giving it.

From the authentik logs:

{"auth_via": "session", "domain_url": "sso.moonpiedumpl.ing", "event": "Application requested scopes not configured, setting to overlap", "host": "sso.moonpiedumpl.ing", "level": "info", "logger": "authentik.providers.oauth2.views.authorize", "pid": 70086, "request_id": "aabf16600fcb46e587f201bdec77a769", "schema_name": "public", "scope_allowed": "{'email', 'profile', 'openid'}", "scope_given": "{'email', 'groups', 'openid'}", "timestamp": "2025-07-26T11:23:10.187861"}

Also, attempting to ask for the preferred_username claim, it also fails. But the email claim works. So essentially, nothing from the profile scope works, even when I explicitly ask for it.

OPENID_CONNECT_SCOPES: "openid email groups"

I think this was the problem, it doesn’t have the profile scope. After removing this config, the group mapping works (but I must create the organization and group in advance).
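The authentik log line above says the scopes were set “to overlap”. Assuming that means the intersection of the allowed and the requested scopes, the effect of the `OPENID_CONNECT_SCOPES` setting can be sketched like this (values copied from the log and the config):

```python
scope_allowed = {"email", "profile", "openid"}    # configured on the authentik provider
scope_given = set("openid email groups".split())  # requested via OPENID_CONNECT_SCOPES

granted = scope_allowed & scope_given   # what the token would actually carry
dropped = scope_given - scope_allowed   # requested but not allowed

print(sorted(granted), sorted(dropped))
```

Under that assumption, `groups` is dropped because it is not an allowed scope, and `profile` is never granted because it was not requested, which would explain why nothing from the profile scope came through.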

cope": "email profile openid openid", "state": "825aa86d-46bf-4ce6-8565-3a92069a3780"}, "method": "GET", "path": "/application/o/authorize/", "request_id": "c59d08ff43a647da80c3037a453d9c4a", "user_agent": "Mozilla/5.0 (X11; Linux x86_64; rv:141.0) Gecko/20100101 Firefox/141.0"}, "scopes": "email profile openid"}, "domain_url": "sso.moonpiedumpl.ing", "event": "Created Event", "host": "sso.moonpiedumpl.ing", "level": "info", "logger": "authentik.events.models", "pid": 91040, "request_id": "c59d08ff43a647da80c3037a453d9c4a", "schema_name": "public", "timestamp": "2025-07-27T06:42:40.711920", "user": {"email": "test@gmail.com", "pk": 7, "username": "testuser"}}

It seems to try to get the openid scope twice though, so I removed it from the config again.

Okay, I tried to start forgejo today and I got an error about not being able to find the database. What happened is that the Bitnami Helm charts that deploy the databases are no longer publicly available for use. Forgejo’s Helm chart now recommends operators for databases.

So I am searching for some options for operators for postgres:

- CloudNativePG

- Most promising, also newest

- Semi-automatic major version upgrades are appealing to me: https://cloudnative-pg.io/documentation/1.26/postgres_upgrades/#offline-in-place-major-upgrades

- Crunchy Postgres Operator

- Zalando

For redis… I think I am going to switch to the internal cache implementation instead. This results in an error though, and I have to put a pause on forgejo for now.

Pangolin

Pangolin is a reverse proxy similar to Cloudflare Tunnels, and also offers VPN capabilities, similar to Netbird. I am very interested in it because it offers a multi-tenant VPN solution, which is a paid feature in Netbird.

But, they offer no kubernetes deployment by default. I will be converting their docker compose deploy to a k8s deployment.

Cloud Native Postgres

https://artifacthub.io/packages/helm/cloudnative-pg/cloudnative-pg

I started out by just copying the repo and helm release templates that I have previously used. It works easily, without me passing in any values. It seems that postgres does not require any configuration.

First, I deployed a test database using an example from their quick start. However, I deployed only one instance, instead of 3, because I only have one physical server.

Although cool, that’s not enough. I also need to ensure it has the correct username/password, and that it restores the information that was previously in the older postgres database that forgejo was using.

forgejo data-forgejo-postgresql-0 Bound pvc-9a77499a-71d8-4a7b-bda4-fd8d66c1c86c 10Gi RWO openebs-persistent-hostpath <unset> 25d

Thankfully, using openebs local paths makes it easy to actually go in and find the database files.

root@thoth:/var/openebs/persistent/pvc-9a77499a-71d8-4a7b-bda4-fd8d66c1c86c/data# ls

base pg_commit_ts pg_ident.conf pg_multixact pg_replslot pg_snapshots pg_stat_tmp pg_tblspc PG_VERSION pg_xact postmaster.opts

global pg_dynshmem pg_logical pg_notify pg_serial pg_stat pg_subtrans pg_twophase pg_wal postgresql.auto.conf

Now, I need to figure out how to import from this. I think what I’m going to have to do, is run a postgres docker container, and then dump the database, so I can import into a new database.

First, I will back up the files to ensure that anything I do doesn’t nuke all my data.

root@thoth:/var/openebs/persistent/pvc-9a77499a-71d8-4a7b-bda4-fd8d66c1c86c# tar -czf data.tar.gz data/

I also ran a test to extract the archive, to make sure that worked.

Now that I’ve done this, I will also need to create a cluster and a database to import the data into.

But when I attempt to start a database using the postgres docker container, it complains that certain config files (like postgresql.conf, pg_hba.conf) are not found. Bitnami’s helm version of postgres seems to automatically create these files somewhere other than the data directory.

Although possible to create those, I think an easier way might be to deploy the older version of forgejo, and then export the data from there using the pgsql cli. It errored due to ownership at first, so I had to run chown.

root@thoth:/var/openebs/persistent/pvc-9a77499a-71d8-4a7b-bda4-fd8d66c1c86c# chown -R 1001:1001 data

And with this, forgejo (and the database I care about) is finally up. I can shell into it, and run pg_dump.

I have no name!@forgejo-postgresql-0:/bitnami/postgresql/backupdir$ pg_dump -U forgejo -f dump.sql

Password:

I have no name!@forgejo-postgresql-0:/bitnami/postgresql/backupdir$ ls

dump.sql

Next up is to deploy the new database. One of the interesting things about cloudnativepg is that it doesn’t just deploy a database, it deploys a cluster, and then deploys databases to that cluster. But, is it better to deploy one cluster with multiple databases, or multiple clusters?

Architecture of postgres cluster/database:

- https://cloudnative-pg.io/documentation/1.17/architecture/

- https://www.cncf.io/blog/2023/09/29/recommended-architectures-for-postgresql-in-kubernetes/

One thing I found via some preliminary research is that CloudNativePG’s backup solutions seem to be cluster based, rather than database based. They have some harsh words about database dumps, anyways:

This section covers physical backups in PostgreSQL. While PostgreSQL also supports logical backups using the pg_dump utility, these are not suitable for business continuity and are not managed by CloudNativePG. If you still wish to use pg_dump, refer to the Troubleshooting / Emergency backup section for guidance.

I think the best option is to have a cluster for each app. This way I could back up each app’s database/cluster, using CloudNativePG’s tools. This backup might not just be for recovery purposes, but also I might want an older version of the database at some point.

Another thing is that it seems that the Cluster resource can automatically initialize a database, using configuration found here. The way that secrets work is documented here.

Also, when cloudnativepg deploys a cluster, it automatically creates kubernetes services for the database.

NAME                  TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
anubis-forgejo        ClusterIP   10.43.225.133   <none>        80/TCP     42d
forgejo-postgres-r    ClusterIP   10.43.188.149   <none>        5432/TCP   5s
forgejo-postgres-ro   ClusterIP   10.43.26.224    <none>        5432/TCP   5s
forgejo-postgres-rw   ClusterIP   10.43.254.130   <none>        5432/TCP   4s

This is pretty neat, you can have readwrite and readonly versions of services. I think I will disable everything but the readwrite service, however.

Although cool, I found that even though cloudnativepg creates a PersistentVolumeClaim, it deletes the claim when the cluster is deleted. This is bad because it means that if I delete the postgres cluster I lose the data. It looks like what you are expected to do is to back up the cluster and bootstrap a new one from that backup.

Another thing to note about storage is that Postgres does not need filesystem journaling, since it keeps its own write-ahead log. I should probably create a custom StorageClass that has writeback/journaling disabled for that folder only. But that seems to not be possible, so instead I will use openebs block volumes, which are designed for this use case.

Also, it turns out that local.csi.openebs.io is the newer provisioner, and supports more features than the older openebs.io/local that I was using. It also looks like migration is not trivial, so I will probably use the newer provisioner only for this.

It does look like I need to have an actual LVM partition/disk available on my host. In addition to that, I also think I may need to update openebs to get access to the newer openebs.io/local chart.
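For reference, a rough sketch of what a StorageClass for the local.csi.openebs.io provisioner and a raw block claim against it could look like. The volume group name lvmvg and both resource names are hypothetical, and the volume group has to be created on the host by hand beforehand.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: openebs-lvm              # placeholder name
provisioner: local.csi.openebs.io
parameters:
  storage: "lvm"
  volgroup: "lvmvg"              # hypothetical LVM volume group that must already exist on the host
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: raw-block-example        # placeholder name
spec:
  storageClassName: openebs-lvm
  volumeMode: Block              # ask for a raw block device instead of a mounted filesystem
  accessModes: ["ReadWriteOnce"]
  resources:
    requests:
      storage: 10Gi              # assumed size
```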

Okay, this really sucks, but Bitnami helm charts break tomorrow (today is 8/27/2025). I need something to keep these services up, and that means forgoing an OpenEBS update, and using a journaled filesystem for my databases. I do at least want backups, so I will investigate how to do those. When combined with simultaneously trying to figure out why my network is wonky, I just don’t have enough time. I suppose stuff like this is why real engineers work as teams.

The problem is backups. CNPG supports two methods, one of which is an object store, and another is via volume snapshots. I wanted to avoid setting up an external object store, especially from the paid platforms.

I wanted to use snapshots, but openebs hostpath does not support volume snapshots. And I don’t have enough time to build and test backups. I think I will use pg_dump, despite them “not being suitable for business continuity”.

It looks like there is no easy way to keep the PVCs from being deleted either. It seems that what you are expected to do is really to bootstrap a new cluster from the backups, like documented here.

Okay, I think I could use openebs-rawfile for some of those features like snapshots.

03:21 < moonpie> okay I get a little bit more time to think

03:22 < moonpie> Like I am considering either zfs or lvm on my extra 4 tb hard drive and then using that for both incus storage and k8s volumes

03:22 < moonpie> (wait no I don't actually get time to think they want forgejo up)

05:27 < moonpie> dumbmf_: okay now it says I need to enable javascript...

05:51 < moonpie> okay

05:51 < moonpie> except for openebs rawfile, I cannot find any solution for block/no filesystem based csi's

05:51 < moonpie> it's kinda weird

05:52 < moonpie> because if you are hosting a dtabase on k8s, it makes sense to not uses a clustered filesystem, because db's do it better

05:52 < moonpie> and they also recommend no journaling/copy on write for performance, and again, because dbs do it better

05:52 < moonpie> but all of the raw block csi options I have found require lvm, zfs, or other partitions/disks to be provisioned

05:53 < moonpie> Like.. why can't I just have one disk and you create loopback devices?

05:53 < moonpie> that's what openebs rawfile does, but it's not cooked yet

05:53 < moonpie> I guess you could say it's a bit... raw

05:54 < moonpie> but the thing is

05:54 < moonpie> even if I forgo no-filesystem based setups

05:55 < moonpie> I still want a hostpath storage class that uses CSI's, so I can use the CSI snapshot/backup feature to handle databases

05:55 < moonpie> CloudnativePostgres attempts to delete the persistentvolume even if you have the policy set to retain - what they expect you do when you spin up a new cluster is just restore from backup, not reattatch an existing volume

05:55 < moonpie> But I haven't been able to find a hostpath based CSI either

05:57 < moonpie> There is a reference implementation, but it says it's "not production ready"

05:57 < moonpie> https://github.com/kubernetes-csi/csi-driver-host-path

05:57 < moonpie> well, it actually does seem to be less usable, then say, alpha software

06:00 < moonpie> I didn't want to use either zfs or lvm

06:00 < moonpie> my main concern was configuration as code

06:00 < moonpie> I want as much as my config in the git repo as possible

06:01 < moonpie> creating a zfs/lvm pool is not that. I can do it ofc, but it's not really what I want

06:02 < moonpie> I was thinking, if I had to redo this setup in the future, I could use Nixos for declarative config of incus and then use https://nixos.wiki/wiki/Disko

06:03 < moonpie> Idk. I feel like there is something i am missing

06:04 < moonpie> like if you have one disk and you want to run a database on k8s, what are you dong?

06:04 < moonpie> Does everybody just use lvm or zfs on their root?

I think the correct choice here is to deploy longhorn with a single replica, and then use that for block storage. That’s also probably what people are doing in the real world. Such a setup reuses the clustered storage you are already using, but in a slightly different configuration that enables you to maximize the performance of your databases.
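A single-replica Longhorn StorageClass could be sketched roughly like this. The class name and the reclaim policy are assumptions for illustration; the parameters are standard Longhorn StorageClass parameters.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: longhorn-single-replica  # placeholder name
provisioner: driver.longhorn.io
allowVolumeExpansion: true
reclaimPolicy: Retain            # assumed; keeps the volume around if the claim is deleted
volumeBindingMode: Immediate
parameters:
  numberOfReplicas: "1"          # no replication, the database handles its own durability
  dataLocality: "best-effort"    # try to keep the replica on the node running the pod
  staleReplicaTimeout: "30"
```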

I also ran an intermediate check to make sure that openebs hostpath does not support block volumes, and it does not. I then attempted to create a longhorn block device for cloudnativepg, and that failed, because I guess it expects a filesystem.

This doesn’t really make sense to me. But I did get cloudnativepg deployed. I can even request a backup via a VolumeSnapshot:

kubectl cnpg backup -m volumeSnapshot -n forgejo forgejo-postgres
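For that command to work, the cluster has to know which VolumeSnapshotClass to use. A sketch of the two pieces, assuming Longhorn is the CSI driver behind the volumes; the class name and storage size are placeholders.

```yaml
apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshotClass
metadata:
  name: longhorn-snapshot        # placeholder name
driver: driver.longhorn.io
deletionPolicy: Delete
parameters:
  type: snap                     # in-cluster Longhorn snapshot, not a backup to an external target
---
apiVersion: postgresql.cnpg.io/v1
kind: Cluster
metadata:
  name: forgejo-postgres
  namespace: forgejo
spec:
  instances: 1
  storage:
    size: 10Gi                   # assumed size
  backup:
    volumeSnapshot:
      className: longhorn-snapshot
```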

They don’t seem to have a command to restore from a backup though. It seems like you are expected to bootstrap a cluster from a backup instead.
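Bootstrapping from a snapshot could look something like the sketch below. The new cluster name and the snapshot name are placeholders; the real snapshot name would come from listing the VolumeSnapshot objects in the namespace.

```yaml
apiVersion: postgresql.cnpg.io/v1
kind: Cluster
metadata:
  name: forgejo-postgres-restored   # placeholder name for the new cluster
  namespace: forgejo
spec:
  instances: 1
  storage:
    size: 10Gi                      # assumed; should not be smaller than the snapshotted volume
  bootstrap:
    recovery:
      volumeSnapshots:
        storage:
          name: forgejo-postgres-snapshot   # placeholder snapshot name
          kind: VolumeSnapshot
          apiGroup: snapshot.storage.k8s.io
```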

To load the database, first I cp the dump in from my laptop:

[moonpie@cachyos-x8664 dormlab]$ kubectl cp dump.sql -n forgejo forgejo-postgres-1:/var/lib/postgresql/data/dump.sql
Defaulted container "postgres" out of: postgres, bootstrap-controller (init)

Then, inside the container I load the dump:

postgres@forgejo-postgres-1:~/data$ psql -U postgres -d forgejo -f dump.sql

And then I spin up forgejo again, pointing to the new database, and it’s finally up.

I have decided to forgo automatic backups, because there is no easy way to have a retention policy with VolumeSnapshots.

Instead, I will just do occasional manual snapshots.
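A manual snapshot can also be written as a Backup resource instead of going through the kubectl plugin, which may fit better with keeping things in git. A minimal sketch, with a placeholder backup name:

```yaml
apiVersion: postgresql.cnpg.io/v1
kind: Backup
metadata:
  name: forgejo-postgres-manual-1   # placeholder; each backup needs its own name
  namespace: forgejo
spec:
  method: volumeSnapshot
  cluster:
    name: forgejo-postgres
```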

LLMs

I am currently looking into options. I want OIDC sign in, and RAG.

I see for frontend options:

- Openwebui

  - Non open source license, but it’s only restricting the ability to rebrand the UI

  - Helm chart provided

- Librechat

- https://github.com/Mintplex-Labs/anything-llm

- https://github.com/Cinnamon/kotaemon

- https://github.com/ItzCrazyKns/Perplexica

  - Designed mainly as an alternative to perplexity rather than a full chatgippity alternative

- https://github.com/oobabooga/text-generation-webui

For backend options:

- Ollama

- https://github.com/Nexesenex/croco.cpp

  - Fork of KoboldAI

- Llmariner

  - Okay, this seems to require the Nvidia operator and an s3 compatible object store

- https://github.com/mudler/LocalAI

  - This one is cool because it seems to let you select between multiple backends, like llama, exllama, and more

  - It also explicitly supports cpu only, intel, amd, and vulkan. Although not relevant to my usecase, this is very interesting.

After some consideration, I decided to deploy Open Web UI. It appears to be the most comprehensive, and has an official helm chart.

Here are the helm chart values: https://github.com/open-webui/helm-charts/blob/main/charts/open-webui/values.yaml

It appears that a subchart of the ollama application is included in the openwebui chart as well. I deployed a fairly simple config, and then I got an error when attempting to use OIDC to sign in:

"You do not have permission to access this resource. Please contact your administrator for assistance."

This was fixed by making sure that the groups whose users I wanted to be able to sign in were listed in “allowedRoles”.

Another concern I have is that ollama does not have any form of authentication. Although this is less of a concern in the real world, where the inside of the kubernetes cluster is considered trusted, in my lab I give people access to the internal subnet. I could restrict traffic flows to this service via Network Policies, but I would rather add some form of authentication to the service. Here is an example using caddy.
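For comparison, the Network Policy route might look roughly like the sketch below. The owui namespace and port 11434 match the ollama URL used in this setup, but the pod labels are assumptions and would need to match whatever labels the charts actually put on the pods.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: ollama-allow-openwebui       # placeholder name
  namespace: owui
spec:
  podSelector:
    matchLabels:
      app.kubernetes.io/name: ollama # assumed label on the ollama pods
  policyTypes: ["Ingress"]
  ingress:
    - from:
        - podSelector:
            matchLabels:
              app.kubernetes.io/name: open-webui   # assumed label on the openwebui pods
      ports:
        - protocol: TCP
          port: 11434
```

This only limits who can reach the port. It does not add credentials, which is why an authenticating proxy is still appealing.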

I decided to use Caddy. I stopped using the ollama subchart, because I wanted to manually feed an ollama url to openwebui instead of letting openwebui guess.

[moonpie@cachyos-x8664 flux-config]$ nix-shell -p caddy genpass

[nix-shell:~/vscode/flux-config]$ genpass -dlu
generatedpassword

[nix-shell:~/vscode/flux-config]$ caddy hash-password
Enter password:
Confirm password:
$2a$14$LdqC7FEIx2w6arGCNw7Pi.qxe2kFSvBecz.IvtdcdLvJe4V.xeyhi

This gets me a password and a hash to use. I feed that to the config, and my default ollama url looks something like this: http://ollama:secretpassword@ollama.owui.svc.cluster.local:11434.
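A rough sketch of the kind of Caddy config this hash could go into, wrapped in a ConfigMap. It assumes Caddy runs as a sidecar next to ollama and that ollama itself has been moved to listen on 127.0.0.1:11435; both of those, and the ConfigMap name, are assumptions for illustration. The directive is spelled basic_auth in recent Caddy releases and basicauth in older ones.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: ollama-caddy               # placeholder name
  namespace: owui
data:
  Caddyfile: |
    # Listen on the usual ollama port and require a username and password.
    :11434 {
      basic_auth {
        # user "ollama", followed by the output of `caddy hash-password`
        ollama <bcrypt-hash-goes-here>
      }
      # Forward authenticated requests to ollama on its assumed local port.
      reverse_proxy 127.0.0.1:11435
    }
```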

Another thing that annoyed me was changing the openwebui pipelines API key to something more secure than the default. The necessary environment variable, PIPELINES_API_KEY, does not seem to be documented, but I found it in a github issue.

Okay, after the rebuild where my hard drives died, I made some changes to authentik, where user accounts no longer have emails.

But many applications, like Openwebui, expect an email. In the OpenWebui docs, they mention an environment variable, ENABLE_OAUTH_WITHOUT_EMAIL, that is necessary for OAuth users without an email to sign in.

It doesn’t work. The config option is not real. Despite being mentioned in a github issue, I could not find it in the source code.

While reading the source code of where it should be present, I notice something that looks relevant: ENABLE_OAUTH_EMAIL_FALLBACK.

Setting this config option to true, together with OAUTH_SUB_CLAIM set to preferred_username, makes everything work.
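In Helm values, that could look something like the fragment below. It assumes the chart exposes an extraEnvVars list for passing environment variables to the openwebui container, so the key name is worth checking against the chart's values.yaml.

```yaml
# Fragment of the openwebui Helm values (key name assumed from the chart's values.yaml)
extraEnvVars:
  - name: ENABLE_OAUTH_EMAIL_FALLBACK
    value: "true"
  - name: OAUTH_SUB_CLAIM
    value: "preferred_username"
```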

Models

I do need to select a small set of good models. I am designing for a multi-user system, meaning that if too many people load too many different models, I will run out of RAM.

One thing that is cool, is that I am not limited to using models from ollama’s repo, as ollama can download models from huggingface and OCI repos.

Although, one irritating thing I have noticed is that the model download doesn’t continue if I close the tab. I must leave the tab open for the model download to complete, despite the fact that the download is handled by ollama and doesn’t depend on anything from my browser session. Thankfully, it seems to save partial progress and resumes when I start the download again.

I’m also interested in the new-ish embeddinggemma:300m model.

Ingress

Ingress-Nginx

Okay, I thought I was done configuring Ingress-Nginx, until I logged into my Authentik and saw that all the logins appeared to be coming from the IP address of my host machine rather than the actual client IP of people who are connecting.

Fiddling with it a little, I found an issue relevant to my setup: https://github.com/k3s-io/klipper-lb/issues/31

Another relevant discussion: https://github.com/k3s-io/k3s/discussions/2997#discussioncomment-1617536

It turns out that klipper, the k3s inbuilt load balancer, does not forward the real client IPs to services.