Building my server part 3 — The switch to debian

Why the switch?

Recently, I have been very busy working on scripts and ansible playbooks for the Collegiate Cyber Defense Competition.

To test those playbooks, I have been using Vagrantfiles. Here is an excerpt:

# -*- mode: ruby -*-

# vi: set ft=ruby :

Vagrant.configure("2") do |config|

config.vm.synced_folder ".", "/vagrant", disabled: true

config.vm.provision "shell", path: "scripts/packages.sh"

config.vm.define "318" do |vmconfig|

vmconfig.vm.provision "ansible" do |ansible|

ansible.playbook = "ansible/inventory.yml"

end

vmconfig.vm.box = "generic/alpine318"

vmconfig.vm.provider "libvirt" do |libvirt|

libvirt.driver = "kvm"

libvirt.memory = 1024

libvirt.cpus = 2

libvirt.video_type = "virtio"

end

vmconfig.vm.provider "virtualbox" do |virtualbox,override|

virtualbox.memory = 1024

virtualbox.cpus = 1

end

end

end

This is from the ccdc-2023 GitHub repo. With a single command, vagrant up, I can create virtual machines and then provision them with ansible playbooks.

Even more impressive, I can use the generated ansible inventory manually, as noted in the ansible documentation. This creates an environment closer to how I would actually use the vagrant ansible playbooks.

Vagrant’s snapshots let me easily freeze machines and revert them to a previous state.

I used vagrant, in combination with Windows vagrant machines, to easily test things for the ccdc environment guide.

However, when I ran vagrant up on my Rocky Linux machine, I got an error: Spice is not supported.

Apparently, Red Hat Enterprise Linux dropped spice support in RHEL 9, and now its rebuilds no longer have spice either.

https://forums.rockylinux.org/t/spice-support-was-dropped-in-rhel-9/6753

https://access.redhat.com/articles/442543 (requires subscription, but the important content is in the beginning).

Except my Arch Linux machines can still run spice virtual machines just fine… So when I want to run Vagrant boxes on my server, like the Windows boxes which require much more memory, I can either work around this missing feature by using the much less performant VNC… or I can switch.

The main reason why I picked Rocky was the 4 year support window of an operating system supported by Kolla-ansible. Rocky is no longer the only option that offers this.

The currently supported Kolla-Ansible release supports Debian 12, which will be supported for all 4 years of my bachelor’s degree (see the Kolla-Ansible Support Matrix).

With this, I decided to switch to debian… but I encountered some challenges.

Installing Debian

When I installed Debian in UEFI mode, it wouldn’t boot. But Rocky Linux booted just fine? Why?

I tested a legacy install, and it booted just fine. I did a little more research, asked a friend for help, and found this:

According to the debian wiki, some UEFI motherboards do not implement the spec properly (even after a BIOS update, it seems). That article describes how to get around this quirk, and why Debian doesn’t do so by default.

All OS installers installing things to this removable media path will conflict with any other such installers, which is bad and wrong. That’s why in Debian we don’t do this by default.

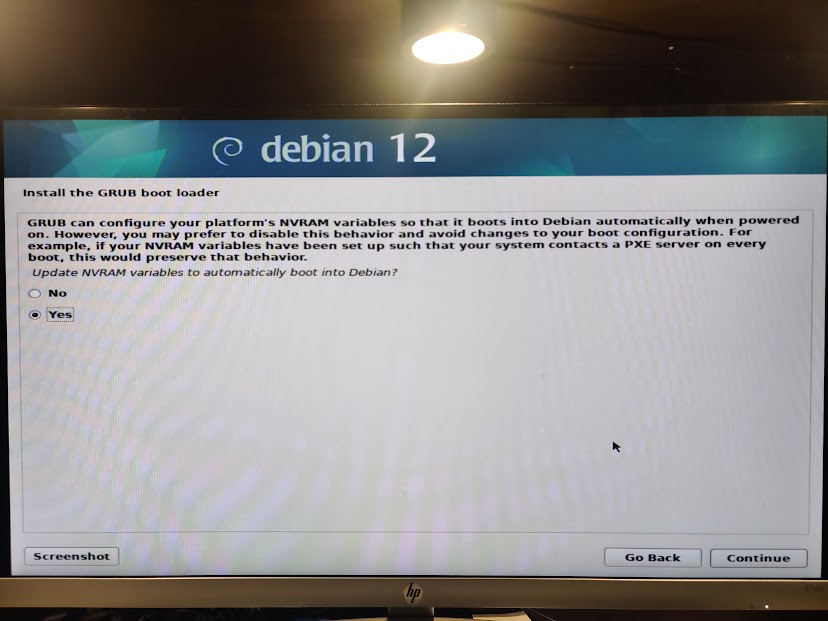

But, it was only after selecting the “expert install” in the installer, that I was eventually presented with this menu:

And by forcing a grub install, I finally got it to boot in UEFI mode.

In my opinion, the debian installer should do this by default if it detects that it is going to be the sole OS. I would rather have a booting install than a standards-compliant one.

Still, it was surprising and disheartening to learn that what I considered to be an enterprise server doesn’t implement a standard like this properly.

Configuring

I don’t want to do openstack just yet. After getting experience with keycloak, active directory, and ldap, I’ve decided that this server can be the host to a variety of services, all using ldap or SSO to sign on. I want a remote development environment, not just for me, but also for my team members.

The other thing I want is for the server to be configured as code. Past the initial setup (podman, libvirt, nix), I want everything to be configured via ansible.

Goals:

Overall, system design goals

- Configuration as code

- Rootless containers when possible

- No docker on bare metal — this interferes with the eventual open stack install

- Security

- Https should be terminated on my home server, not on my vps

Service/Specific goals for the short(er) term:

- Podman

- Nvidia runtime, for kasm hardware acceleration, and AI

- LDAP

- Do I want openldap or lldap?

- keycloak

- Connected to ldap, of course

- everything should be SSO when possible

- Kasmweb

- Running this in a privileged podman container, rather than docker, is my only real option

- Create kasm containers for development environment for my teams

- Nix in kasm docker containers

- Hardware acceleration via Nvidia?

- Mounting /dev/kvm inside for libvirt inside? Or remote libvirt

Later, I want:

- Forgejo — a source forge

- Move my blog to this server, from github pages

- Continue to use some form of CI/CD for it.

Reverse Proxies and HTTPS

To ensure maximum security, I need to terminate HTTPS on the home server, which is completely under my control, unlike the VPS I’m renting from Contabo.

Currently, I have a simple setup using nginx proxy manager, which reverse proxies any sites I want to create and terminates TLS/SSL on the VPS.

I don’t really feel like going back to pure nginx. It was very painful to configure, which is why I switched to the easier frontend, nginx proxy manager (npm).

I attempted to set up a stream, but I was simply redirected to the “Nginx Proxy Manager” page instead.

I bought a domain: <moonpiedumpl.ing>. It looks very clean. I will use subdomains for each service, something like <keycloak.moonpiedumpl.ing> will be the keycloak service, and so on.

I want a declarative reverse proxy, that can reverse proxy existing https servers, without issues. Caddy can probably do this.

I think the crucial thing is that Caddy sets some of the reverse proxy headers by default. It’s documented here: https://caddyserver.com/docs/caddyfile/directives/reverse_proxy#defaults

So the second Caddy proxy, located on the internal server, would have the trusted_proxies option set to the IP address that the internal server sees coming from the VPS. This will cause it to leave the forwarded headers alone, allowing the internal server to see the real IP address of the machines connecting to the services.

Caddy can also configure https automatically: https://caddyserver.com/docs/caddyfile/options#email

As for TLS passthrough on the external Caddy service, I found some relevant content:

https://caddy.community/t/reverse-proxy-chaining-vps-local-server-service-trusted-proxies/18105

Alright. This isn’t working. Double reverse proxies are a pain to configure, plus they seem to be lacking support for some things.

So… alternatives?

It seems to forward traffic as is, which is good, because I don’t want it to get in the way of caddy.

I am also looking at iptables as a reverse proxy. I am reluctant to test this, as it requires that I tear down some of my declarative configuration to try it in an imperative manner.

There are several relevant stackoverflow answers: this one and this one.

I did a little bit of experimenting with iptables, but it looks to be more effort than it is worth to configure, for something that might not even work.

I started looking through this: https://github.com/anderspitman/awesome-tunneling

Projects like frp appeal to me and look like they do what I want.

Out of them, rathole appeals to me the most. The benchmarks advertise high performance, and it has an official docker container. This would make it easy to deploy.

I don’t like the existing rathole docker images. The official one is older than the code is, and the other docker image uses a bash script that reads environment variables to configure itself.

I’ve decided to compile rathole myself. I want a static binary I can copy over to an empty docker container, and then docker can handle the service management.

Originally I was going to compile rathole statically in an alpine container, but I kept encountering issues, so I decided to use the debian container (that is one of the solutions I saw on the answer sites).

# Debian bookworm base image (Dockerfiles do not allow trailing comments on an instruction)
FROM rust:latest AS stage0

ENV RUSTFLAGS="-C target-feature=+crt-static"

# Refresh the package index first, and install non-interactively
RUN apt-get update && apt-get install -y musl-tools

RUN git clone https://github.com/rapiz1/rathole

RUN rustup target install x86_64-unknown-linux-musl

RUN cd rathole && cargo build --release --target x86_64-unknown-linux-musl

FROM scratch

COPY --from=stage0 /rathole/target/x86_64-unknown-linux-musl/release/rathole /bin/rathole

CMD ["/bin/rathole"]

It uses a multi-stage build to copy the statically compiled binary to the final container.

Podman

Rootless

Things were going smoothly, until I encountered a roadblock:

TASK [Create caddy directory] ************************************************************************************************************

fatal: [moonstack]: FAILED! => {"msg": "Failed to set permissions on the temporary files Ansible needs to create when becoming an unprivileged user (rc: 1, err: chmod: invalid mode: ‘A+user:podmaner:rx:allow’\nTry 'chmod --help' for more information.\n}). For information on working around this, see https://docs.ansible.com/ansible-core/2.16/playbook_guide/playbooks_privilege_escalation.html#risks-of-becoming-an-unprivileged-user"}

The relevant ansible code uses become to run as the podmaner user, and then runs the ansible podman container module.

It links to some docs here: https://docs.ansible.com/ansible-core/2.16/playbook_guide/playbooks_privilege_escalation.html#risks-of-becoming-an-unprivileged-user

This was fixed after I installed the acl package.

Pods

Podman has “pods”. These are somewhat similar to docker networks, which I prefer.

With docker networks, the “network” has a DNS, and containers can find each other by container name. I enjoyed this with nginx proxy manager, because then I could just forward “containername:port” and it would be easy.

Podman pods work a bit differently. Every pod can contain multiple containers, but they share a network interface. I tested this with two alpine containers: even if one container binds a port to localhost, the other container can still access it.

I am using ansible to create pods.

Systemd Integration

Ansible can generate systemd units for pods and containers:

- Pods: https://docs.ansible.com/ansible/latest/collections/containers/podman/podman_pod_module.html#parameter-generate_systemd

- Containers: https://docs.ansible.com/ansible/latest/collections/containers/podman/podman_container_module.html#parameter-generate_systemd

Rather than starting the containers using ansible, I can then start the whole pod using the systemd unit for the pod, and ansible’s systemd service module. (From my testing, if you start a pod, it also starts all containers in the pod).

In order for this to work, I need to have systemd user services configured and working. However, I get an error:

podmaner@thoth:~$ systemctl status --user

Failed to connect to bus: No medium found

A bit of research says that this is because the systemd daemon is not running… but it is:

root@thoth:~# ps aux | grep "systemd --user"

podmaner 1095 0.0 0.0 18984 10760 ? Ss 13:33 0:00 /lib/systemd/systemd --user

moonpie 1513 0.1 0.0 19140 10780 ? Ss 13:33 0:00 /lib/systemd/systemd --user

root 1648 0.0 0.0 6332 2036 pts/6 S+ 13:35 0:00 grep systemd --user

It works on my moonpie user:

moonpie@thoth:~$ systemctl status --user

● thoth

State: running

Units: 139 loaded (incl. loaded aliases)

Jobs: 0 queued

Failed: 0 units

Since: Sat 2024-04-20 13:33:22 PDT; 1min 27s ago

systemd: 252.22-1~deb12u1

CGroup: /user.slice/user-1000.slice/user@1000.service

└─init.scope

├─1513 /lib/systemd/systemd --user

└─1514 "(sd-pam)"

I found an askubuntu answer which has the solution I need. The variables $XDG_RUNTIME_DIR and $DBUS_SESSION_BUS_ADDRESS need to be set. However, in my testing, only the former actually needed to be set.

Ansible’s documentation on user services actually addresses this.

- name: Run a user service when XDG_RUNTIME_DIR is not set on remote login

ansible.builtin.systemd_service:

name: myservice

state: started

scope: user

environment:

XDG_RUNTIME_DIR: "/run/user/{{ myuid }}"

The environment dictionary sets environment variables for a task.
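As a rough illustration of what those two variables contain, the sketch below builds them for a given UID. The function name user_session_env is hypothetical, and the bus socket path is an assumption based on the usual systemd layout of /run/user/<uid>/bus.

```python
import os


def user_session_env(uid):
    """Return the variables `systemctl --user` uses to find a user's session bus."""
    runtime_dir = f"/run/user/{uid}"
    return {
        "XDG_RUNTIME_DIR": runtime_dir,
        # Assumption: the user bus socket sits at the default systemd location.
        "DBUS_SESSION_BUS_ADDRESS": f"unix:path={runtime_dir}/bus",
    }


if __name__ == "__main__":
    # Print the values for whoever runs the script.
    for key, value in user_session_env(os.getuid()).items():
        print(f"{key}={value}")
```

For the podmaner user in the unit files shown in this post (UID 1001), this would give XDG_RUNTIME_DIR=/run/user/1001.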

And then of course, when configuring and testing, I like the solution as suggested by the stackoverflow answer:

sudo machinectl shell user@ to log into a local user with dbus and environment variables properly set up.

Now, I simply have to ensure that generated systemd unitfiles for containers go in the right place, $HOME/.config/systemd/user/, like so:

- name: Authentik Redis database

containers.podman.podman_container:

name: authentik_redis

pod: authentik_pod

image: docker.io/library/redis:alpine

command: --save 60 1 --loglevel warning

env:

AUTHENTIK_SECRET_KEY: "{{ authentik_secret_key }}"

volumes:

- "{{ homepath.stdout }}/authentik/redis:/data"

generate_systemd:

restart_policy: "always"

path: "{{ homepath.stdout }}/.config/systemd/user/"

And the service shows up in the systemd user session:

podmaner@thoth:~/.config$ systemctl --user status container-authentik_redis.service

○ container-authentik_redis.service - Podman container-authentik_redis.service

Loaded: loaded (/home/podmaner/.config/systemd/user/container-authentik_redis.service; disabled; preset: enabled)

Active: inactive (dead)

Docs: man:podman-generate-systemd(1)

However…

$HOME/.config/systemd/user/container-authentik_redis.service

[Unit]

Description=Podman container-authentik_redis.service

Documentation=man:podman-generate-systemd(1)

Wants=network-online.target

After=network-online.target

RequiresMountsFor=/tmp/podman-run-1001/containers

[Service]

Environment=PODMAN_SYSTEMD_UNIT=%n

Restart=always

TimeoutStopSec=70

ExecStart=/usr/bin/podman start authentik_redis

ExecStop=/usr/bin/podman stop \

-t 10 authentik_redis

ExecStopPost=/usr/bin/podman stop \

-t 10 authentik_redis

PIDFile=/tmp/podman-run-1001/containers/vfs-containers/5fed7e5a1116899a7e1915793f0f8d3bd0f09c1884e8d574138a72b55964211f/userdata/conmon.pid

Type=forking

[Install]

WantedBy=default.target

podman generate systemd doesn’t actually generate quadlets, but rather a user unit that calls podman. This is a problem, because I was expecting quadlets, which define the container itself in the unit file.

Without quadlets, podman manages its own state, which has issues, and was the entire reason I was looking into alternatives to podman for managing state.

More research: I found an ansible role to generate podman quadlets, https://github.com/linux-system-roles/podman, but I don’t really want to include ansible roles in my existing ansible roles. Also, it takes kubernetes yaml as input, which is very complex for what I am trying to do. At that point, why not just use a single node kubernetes cluster and let kubernetes manage state?

However:

Quadlet, a tool merged into Podman 4.4

podmaner@thoth:~/.config/systemd/user$ podman --version

podman version 4.3.1

My Debian system doesn’t support quadlets. I could use nix or something else to get a newer version of podman, since I am doing rootless, but that feels like way too much complexity for what will ultimately not be a permanent solution, as I plan to eventually move to a kubernetes cluster.

Later, I did discover that ansible supports writing quadlet files, rather than creating a container:

From ansible docs on podman_container:

quadlet - Write a quadlet file with the specified configuration. Requires the quadlet_dir option to be set.

I still don’t think I’m going to use quadlets, but the option is nice.

Rather, I opted for something else: Generating a systemd service using ansible.

From my testing, starting a pod using podman pod start pod-name starts all containers in the pod, so I can simply generate a systemd service for each pod. When that service is started (or stopped), it also controls all containers in the pod.

- name: Create relevant podman pod

containers.podman.podman_pod:

name: authentik_pod

userns: keep-id:uid=1000,gid=1000

state: created

generate_systemd:

restart_policy: "always"

path: "{{ homepath.stdout }}/.config/systemd/user/"

Then, I can use ansible’s systemd service module to start the pod, and all the containers in it… except not.

$HOME/.config/systemd/user/pod-authentik_pod.service

# pod-authentik_pod.service

# autogenerated by Podman 4.3.1

# Sat Apr 27 16:07:36 PDT 2024

[Unit]

Description=Podman pod-authentik_pod.service

Documentation=man:podman-generate-systemd(1)

Wants=network-online.target

After=network-online.target

RequiresMountsFor=/tmp/podman-run-1001/containers

Wants=

Before=

[Service]

Environment=PODMAN_SYSTEMD_UNIT=%n

Restart=always

TimeoutStopSec=70

ExecStart=/usr/bin/podman start f596f20d51c2-infra

ExecStop=/usr/bin/podman stop \

-t 10 f596f20d51c2-infra

ExecStopPost=/usr/bin/podman stop \

-t 10 f596f20d51c2-infra

PIDFile=/tmp/podman-run-1001/containers/vfs-containers/f3f084bceb1041e2a9aa18a9b8088de1efa6ff6ea7bb81f0fbfb4bf8fd1558d2/userdata/conmon.pid

Type=forking

[Install]

WantedBy=default.target

Rather than running podman pod start pod-name, the unit just starts the infra container for that pod, meaning the other containers in the pod aren’t started. The same goes for stopping pods. Although I suspect I can manage pods using systemd, similar to containers, podman’s systemd unit file generation doesn’t do that.

So, now what?

I see two options:

- Line/word replace the systemd file to reference the pod itself, rather than the infra container

- Generate systemd unit files for each container, and then start those

Both of these suck. The first is hacky, and the second is way too wordy and probably redundant.
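For reference, the first option could look roughly like the sketch below. The helper name point_unit_at_pod is hypothetical. podman pod start and podman pod stop are real subcommands, but whether the generated Type=forking and PIDFile settings still behave correctly after this rewrite is untested here.

```python
import re


def point_unit_at_pod(unit_text, infra_name, pod_name):
    """Rewrite a generated unit so it starts/stops the whole pod, not the infra container."""
    rewritten = []
    for line in unit_text.splitlines():
        # `podman start X` / `podman stop X` become `podman pod start X` / `podman pod stop X`.
        line = re.sub(r"/usr/bin/podman (start|stop)\b", r"/usr/bin/podman pod \1", line)
        # Swap the infra container name for the pod name, including on continuation lines.
        line = line.replace(infra_name, pod_name)
        rewritten.append(line)
    return "\n".join(rewritten)


if __name__ == "__main__":
    sample = (
        "ExecStart=/usr/bin/podman start f596f20d51c2-infra\n"
        "ExecStop=/usr/bin/podman stop \\\n"
        "-t 10 f596f20d51c2-infra"
    )
    print(point_unit_at_pod(sample, "f596f20d51c2-infra", "authentik_pod"))
```

The infra container name changes whenever the pod is recreated, so the rewrite would have to run again after every regeneration, which is part of why this approach feels hacky.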

Why isn’t there an easier way to start an entire pod at once, rootless?

Podman doesn’t support this. But do you know what does? Kubernetes. I think it’s time to switch. I’ve had too many issues with podman.

Ansibilizing the server

Ansible facts HOME =/= $HOME

I was having ansible run some things as another user, and using something like this to get the home directory of the user it was running as:

- name: Do podmaner container

become: true

become_user: "{{ rathole_user }}"

block:

- name: Create rathole config

ansible.builtin.file:

path: "{{ lookup('ansible.builtin.env', 'HOME') }}/rathole/config"

state: directory

mode: '0775'

But this errors. Despite rathole_user being podmaner, the error is something like:

TASK [rathole : Create rathole config] *******************************************************************************************

fatal: [moonstack]: FAILED! => {"changed": false, "msg": "There was an issue creating /home/moonpie/rathole as requested: [Errno 13] Permission denied: b'/home/moonpie/rathole'", "path": "/home/moonpie/rathole/config"}

This might be because, when executing one-off commands, sudo does not keep environment variables:

moonpie@thoth:~$ sudo -iu podmaner echo $HOME

/home/moonpie

Interestingly enough, using the $HOME environment variable works though.

- name: Do podmaner container

become: true

become_user: "{{ rathole_user }}"

block:

- name: Get home

ansible.builtin.command: "echo $HOME"

register: homepath

- name: Print current home

ansible.builtin.debug:

msg: "{{ homepath.stdout }}"

TASK [rathole : Print current home] **********************************************************************************************

ok: [moonstack] => {

"msg": "/home/podmaner"

}

Oh, I figured it out. After a quick google, I found that the env lookup runs on the control node, rather than on the server being managed.

From the ansible docs: “query the environment variables available on the controller”
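The underlying rule is that whichever side performs the variable expansion decides the value. The sketch below is a local analogy only, not Ansible code: echo_home is a hypothetical helper, and the child process stands in for the become user. It also shows why the sudo -iu podmaner echo $HOME test above prints /home/moonpie: the calling shell substitutes $HOME before sudo ever runs.

```python
import os
import subprocess


def echo_home(expand_in_caller, target_home):
    """Echo HOME for a child process whose HOME is target_home; return what it prints."""
    env = {"HOME": target_home, "PATH": os.environ.get("PATH", "/usr/bin:/bin")}
    if expand_in_caller:
        # The caller substitutes its own HOME into the arguments before the child
        # starts, like an interactive shell does for `sudo -iu podmaner echo $HOME`.
        argv = ["sh", "-c", 'echo "$1"', "sh", os.environ.get("HOME", "")]
    else:
        # The child shell expands $HOME itself, using its own environment.
        argv = ["sh", "-c", "echo $HOME"]
    result = subprocess.run(argv, env=env, capture_output=True, text=True, check=True)
    return result.stdout.strip()


if __name__ == "__main__":
    print("expanded by caller:", echo_home(True, "/home/podmaner"))
    print("expanded by child: ", echo_home(False, "/home/podmaner"))
```

Registering the output of echo $HOME in a task behaves like the second case, because the expansion happens on the managed host as the become user.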

I also decided to try ansible_facts['env']['HOME'], but that still outputs /home/moonpie. It seems that facts are gathered using the user ansible initially logs in as.

ansible_env['HOME'] doesn’t work either, probably for the same reason.

This was a fun little tangent. I think I am going to return to using $HOME and other environment variables. Since things like podman volumes can’t simply be fed environment variables, I have to echo $HOME first and register its stdout. It feels like there should be a cleaner way to do this, but this does work.

Ansible Podman Healthchecks

I think I found some undocumented behavior. I was attempting to adapt the authentik docker compose healthchecks to be configured via ansible and podman instead.

The ansible podman_container docs have a section on healthchecks.

The official docs say this is correct:

- name: Authentik postgres database

containers.podman.podman_container:

name: authentik_postgres

pod: authentik_pod

image: "moonpiedumplings/authentik_postgres:12"

healthcheck: "CMD-SHELL pg_isready -d $${POSTGRES_DB} -U $${POSTGRES_USER}"

healthcheck_retries: 5

healthcheck_start_period: 20s

And it is. But I attempted to copy and paste the official authentik docker-compose for this anyway, to see if it worked:

- name: Authentik Redis database

containers.podman.podman_container:

name: authentik_redis

pod: authentik_pod

image: docker.io/library/redis:alpine

command: --save 60 1 --loglevel warning

healthcheck:

test: "CMD-SHELL redis-cli ping | grep PONG"

start_period: 20s

interval: 30s

retries: 5

timeout: 3s

And I found that this actually still worked.

podmaner@thoth:~$ podman inspect authentik_redis

...

....

"--healthcheck-command",

"{'test': 'CMD-SHELL redis-cli ping | grep PONG', 'start_period': '20s', 'interval': '30s', 'retries': 5, 'timeout': '3s'}",

...

...

I find it interesting that this works despite not being documented. Should I rely on this behavior? I like the way it looks, as it’s much more readable than the _underscore format for these particular variables.
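One caveat worth checking before relying on this: the --healthcheck-command value in the inspect output is character for character what Python produces when a dictionary is converted to a string. The sketch below reproduces it. That suggests (this is an assumption, not something the module documentation confirms) that the mapping was stringified and passed along as the command, rather than being parsed into separate interval, retries, and timeout settings.

```python
# The same mapping as in the playbook task above.
healthcheck = {
    "test": "CMD-SHELL redis-cli ping | grep PONG",
    "start_period": "20s",
    "interval": "30s",
    "retries": 5,
    "timeout": "3s",
}

# A plain string conversion of the mapping, as would happen if a dict were
# handed to an option that expects a string.
as_string = str(healthcheck)
print(as_string)
```

If that is what happened, the container was created successfully but the interval, retries, and timeout may still be at their defaults, so comparing against the documented healthcheck_* options with podman inspect seems worthwhile.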

Ansible vault

If I am going to use rathole, then I need to deploy a config file with client and server secrets. Although it is an extra layer of complexity, ansible thankfully makes handling secrets easy with ansible vault.

Main docs here: https://docs.ansible.com/ansible/latest/vault_guide/index.html

Docs on handling vault passwords

I only need a few variables encrypted, so maybe:

Docs on encrypting individual variables

Or I could encrypt a file with the variables I want and include it?

I opted to encrypt only certain variables within my inventory file, using a single password.

Service deployment

All of this will be ansibilized, ideally.

Rathole

In addition to the build above, I used a template to deploy the rathole container, in case I want more than just http or https on my server (minecraft?).

For example, here is the “client” code:

client.toml.j2

{% for service in rathole_services %}

[client.services.{{ service.name }}]

token = "{{ rathole_secret }}"

local_addr = "{{ service.local_addr }}"

{% endfor %}

This uses ansible’s ability to do for loops in templates. The server config has something similar.
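To make the loop’s output concrete, here is a plain Python stand-in for the Jinja2 template (it is not how ansible renders it). The service names, addresses, and token in the example are made up for illustration.

```python
def render_client_services(services, token):
    """Emit one [client.services.<name>] table per service, mirroring the template loop."""
    blocks = []
    for service in services:
        blocks.append(
            f"[client.services.{service['name']}]\n"
            f'token = "{token}"\n'
            f'local_addr = "{service["local_addr"]}"\n'
        )
    return "\n".join(blocks)


if __name__ == "__main__":
    # Hypothetical services; the real list comes from the rathole_services variable.
    example_services = [
        {"name": "http", "local_addr": "127.0.0.1:8080"},
        {"name": "https", "local_addr": "127.0.0.1:8443"},
    ]
    print(render_client_services(example_services, "example-token"))
```

Adding another forwarded port, such as a game server, then only means adding one more entry to the list.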

Caddy

I have a simple caddyfile, right now, but it doesn’t work:

Caddyfile.j2

http://test.moonpiedumpl.ing {

reverse_proxy * http://localhost:8000

}

I need to expand this. I dislike how Caddy only takes a config file, and there isn’t something like a premade docker container that takes environment variables, but it is what it is.

For a jinja2 template, something like this:

Caddyfile.j2

{% for service in caddy_services %}

{{ service.domain_name }} {

{% if service.port is defined %}

reverse_proxy http://127.0.0.1:{{ service.port }}

{% if service.request_body is defined %}

request_body {

max_size 2048MB

}

{% endif %}

{% endif %}

{% if service.file_root is defined %}

root * {{ service.file_root }}

file_server

{% endif %}

}

{% endfor %}

And then the caddy role would take these hosts:

- role: caddy

vars:

caddy_services:

- domain_name: "test.moonpiedumpl.ing"

port: 8000 # This is where the python http server runs by default, for my tests.

This almost worked, but I got an error in the caddy logs:

dial tcp 127.0.0.1:8000: connect: connection refused

The issue is that the podman container has its own network namespace, so 127.0.0.1 inside the container refers to the container itself rather than to the host. Attempting to curl localhost from inside it results in the wrong network interface being accessed.

Also, I need to add the trusted_proxies option, otherwise caddy will not report the real ip address of the client.

I adjusted the podman container to not forward any ports, and instead to be in “host” networking mode, where it shares the interfaces with the host.

Caddyfile.j2

{

http_port {{ caddy_http_port }}

https_port {{ caddy_https_port }}

{% if caddy_trusted_proxies | length > 0 %}

trusted_proxies static {{ caddy_trusted_proxies | join(' ') }}

{% endif %}

}

Except this errors. Caddy complains: Error: adapting config using caddyfile: /etc/caddy/Caddyfile:4: unrecognized global option: trusted_proxies and the container dies.

Also, the generated file looks really weird:

Caddyfile

{

http_port 8080

https_port 8443

trusted_proxies static 154.12.245.181

}

test.moonpiedumpl.ing {

reverse_proxy http://127.0.0.1:8000

}

The spacing is off, and that might be the reason why it errors?

I corrected the jinja2 spacing according to its docs, and also corrected the caddy config. trusted_proxies needs to be placed inside a servers block for it to be a global option, or inside each of the sites, if you want to make it site specific.
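The placement rule can be sketched as a small generator. This is a plain Python stand-in for the template, with a hypothetical function name, and it only covers the global options block.

```python
def render_global_options(http_port, https_port, trusted_proxies):
    """Build the Caddyfile global options block, nesting trusted_proxies under servers."""
    lines = ["{", f"\thttp_port {http_port}", f"\thttps_port {https_port}"]
    if trusted_proxies:
        # trusted_proxies is not accepted directly at the top level of the
        # global options block, so it is wrapped in `servers { ... }`.
        lines.append("\tservers {")
        lines.append(f"\t\ttrusted_proxies static {' '.join(trusted_proxies)}")
        lines.append("\t}")
    lines.append("}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(render_global_options(8080, 8443, ["154.12.245.181"]))
```

With an empty proxy list the servers block is omitted entirely, matching the length check in the template.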

The corrected generated config:

Caddyfile

{

http_port 8080

https_port 8443

servers {

trusted_proxies static 154.12.245.181

}

}

test.moonpiedumpl.ing {

reverse_proxy http://127.0.0.1:8000

}

However, this does not work. I only get:

[moonpie@lizard moonpiedumplings.github.io]$ curl test.moonpiedumpl.ing

curl: (52) Empty reply from server

The caddy logs do not show anything relevant. I also did an additional test, by running a podman alpine container with host networking, where it was able to curl localhost just fine.

It is interesting, how Caddy returns an “empty reply”, rather than what I am expecting, a “502 Bad Gateway” — that’s the reply that you usually get when the backend server is down, which it was in a lot of my testing.

So I throw in a quick “Hello World”

hello.moonpiedumpl.ing {

respond "Hello World!"

}

And I still get an empty reply.

The caddy logs, however, do say something interesting:


{"level":"info","ts":1707778344.991714,"logger":"http.auto_https","msg":"server is listening only on the HTTPS port but has no TLS connection policies; adding one to enable TLS","server_name":"srv0","https_port":8443}

.....

....

.....

{"level":"info","ts":1707778344.991727,"logger":"http.auto_https","msg":"enabling automatic HTTP->HTTPS redirects","server_name":"srv0"}

{"level":"error","ts":1708452118.3423748,"logger":"http.acme_client","msg":"validating authorization","identifier":"hello.moonpiedumpl.ing","problem":{"type":"urn:ietf:params:acme:error:connection","title":"","detail":"154.12.245.181: Fetching http://hello.moonpiedumpl.ing/.well-known/acme-challenge/1qnsZcobKUGtdEG2KON-FeFwio97TFxWCu7hqXSY89s: Error getting validation data","instance":"","subproblems":[]},"order":"https://acme-v02.api.letsencrypt.org/acme/order/1560639027/246082250997","attempt":2,"max_attempts":3}

{"level":"error","ts":1708452118.3424225,"logger":"tls.obtain","msg":"could not get certificate from issuer","identifier":"hello.moonpiedumpl.ing","issuer":"acme-v02.api.letsencrypt.org-directory","error":"HTTP 400 urn:ietf:params:acme:error:connection - 154.12.245.181: Fetching http://hello.moonpiedumpl.ing/.well-known/acme-challenge/1qnsZcobKUGtdEG2KON-FeFwio97TFxWCu7hqXSY89s: Error getting validation data"}

{"level":"warn","ts":1708452118.3426523,"logger":"http","msg":"missing email address for ZeroSSL; it is strongly recommended to set one for next time"}

{"level":"info","ts":1708452119.2906954,"logger":"http","msg":"generated EAB credentials","key_id":"N8YqjedBRmclRYYFjYqZGw"}

{"level":"info","ts":1708452136.0253348,"logger":"http","msg":"waiting on internal rate limiter","identifiers":["hello.moonpiedumpl.ing"],"ca":"https://acme.zerossl.com/v2/DV90","account":""}

{"level":"info","ts":1708452136.0253737,"logger":"http","msg":"done waiting on internal rate limiter","identifiers":["hello.moonpiedumpl.ing"],"ca":"https://acme.zerossl.com/v2/DV90","account":""}

{"level":"info","ts":1708452143.7124622,"logger":"http.acme_client","msg":"trying to solve challenge","identifier":"hello.moonpiedumpl.ing","challenge_type":"http-01","ca":"https://acme.zerossl.com/v2/DV90"}

{"level":"error","ts":1708452153.466225,"logger":"http.acme_client","msg":"challenge failed","identifier":"hello.moonpiedumpl.ing","challenge_type":"http-01","problem":{"type":"","title":"","detail":"","instance":"","subproblems":[]}}

{"level":"error","ts":1708452153.4662635,"logger":"http.acme_client","msg":"validating authorization","identifier":"hello.moonpiedumpl.ing","problem":{"type":"","title":"","detail":"","instance":"","subproblems":[]},"order":"https://acme.zerossl.com/v2/DV90/order/mlTQtXTaA_eyy7PFDbdZSg","attempt":1,"max_attempts":3}

{"level":"error","ts":1708452153.4663181,"logger":"tls.obtain","msg":"could not get certificate from issuer","identifier":"hello.moonpiedumpl.ing","issuer":"acme.zerossl.com-v2-DV90","error":"HTTP 0 - "}

{"level":"error","ts":1708452153.466387,"logger":"tls.obtain","msg":"will retry","error":"[hello.moonpiedumpl.ing] Obtain: [hello.moonpiedumpl.ing] solving challenge: hello.moonpiedumpl.ing: [hello.moonpiedumpl.ing] authorization failed: HTTP 0 - (ca=https://acme.zerossl.com/v2/DV90)","attempt":1,"retrying_in":60,"elapsed":41.717184567,"max_duration":2592000}

It seems like the certificate challenge fails. If caddy is doing auto https redirects, then it makes sense that the reply is empty.

And curling https?

[moonpie@lizard server-configs]$ curl https://hello.moonpiedumpl.ing

curl: (35) OpenSSL SSL_connect: SSL_ERROR_SYSCALL in connection to hello.moonpiedumpl.ing:443

If caddy isn’t serving on https properly, then it makes sense that this fails.

Later, I think I discovered the issue:

podmaner@thoth:~/rathole$ podman ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

868115b4e818 docker.io/moonpiedumplings/rathole:latest rathole --client ... 2 weeks ago Up 7 minutes ago rathole

860ec72e5c78 docker.io/library/caddy:latest caddy run --confi... 2 weeks ago Up 7 minutes ago 0.0.0.0:8080->80/tcp, 0.0.0.0:8443->443/tcp caddy

Why is Caddy forwarding ports? My ansible playbooks set the podman networking to be host networking. It seems that, when rerunning a podman container using ansible, it doesn’t properly destroy it, leaving some lingering configs.

However, I get an error:

podmaner@thoth:~$ podman logs caddy

...

...

{"level":"info","ts":1709764202.9553177,"logger":"tls.issuance.acme","msg":"waiting on internal rate limiter","identifiers":["hello.moonpiedumpl.ing"],"ca":"https://acme-v02.api.letsencrypt.org/directory","account":"moonpiedumplings2@gmail.com"}

{"level":"info","ts":1709764202.955384,"logger":"tls.issuance.acme","msg":"done waiting on internal rate limiter","identifiers":["hello.moonpiedumpl.ing"],"ca":"https://acme-v02.api.letsencrypt.org/directory","account":"moonpiedumplings2@gmail.com"}

{"level":"info","ts":1709764202.9641545,"logger":"tls.issuance.acme","msg":"waiting on internal rate limiter","identifiers":["test.moonpiedumpl.ing"],"ca":"https://acme-v02.api.letsencrypt.org/directory","account":"moonpiedumplings2@gmail.com"}

{"level":"info","ts":1709764202.964186,"logger":"tls.issuance.acme","msg":"done waiting on internal rate limiter","identifiers":["test.moonpiedumpl.ing"],"ca":"https://acme-v02.api.letsencrypt.org/directory","account":"moonpiedumplings2@gmail.com"}

{"level":"info","ts":1709764203.0985403,"logger":"tls.issuance.acme","msg":"waiting on internal rate limiter","identifiers":["sso.moonpiedumpl.ing"],"ca":"https://acme-v02.api.letsencrypt.org/directory","account":"moonpiedumplings2@gmail.com"}

{"level":"info","ts":1709764203.0985787,"logger":"tls.issuance.acme","msg":"done waiting on internal rate limiter","identifiers":["sso.moonpiedumpl.ing"],"ca":"https://acme-v02.api.letsencrypt.org/directory","account":"moonpiedumplings2@gmail.com"}

{"level":"info","ts":1709764203.2928238,"logger":"tls.issuance.acme.acme_client","msg":"trying to solve challenge","identifier":"test.moonpiedumpl.ing","challenge_type":"tls-alpn-01","ca":"https://acme-v02.api.letsencrypt.org/directory"}

{"level":"info","ts":1709764203.434616,"logger":"tls.issuance.acme.acme_client","msg":"trying to solve challenge","identifier":"sso.moonpiedumpl.ing","challenge_type":"tls-alpn-01","ca":"https://acme-v02.api.letsencrypt.org/directory"}

{"level":"error","ts":1709764203.478285,"logger":"tls.obtain","msg":"could not get certificate from issuer","identifier":"test.moonpiedumpl.ing","issuer":"acme-v02.api.letsencrypt.org-directory","error":"[test.moonpiedumpl.ing] solving challenges: presenting for challenge: presenting with embedded solver: could not start listener for challenge server at :443: listen tcp :443: bind: permission denied (order=https://acme-v02.api.letsencrypt.org/acme/order/1605766427/250173951227) (ca=https://acme-v02.api.letsencrypt.org/directory)"}

And more of the same.

So apparently, Caddy doesn’t automatically do the acme challenge on the http_port or https_port, and you have to explicitly specify an alternate port:

sso.moonpiedumpl.ing {

reverse_proxy http://127.0.0.1:9000

tls {

issuer acme {

alt_http_port 8080

alt_tlsalpn_port 8443

}

}

}

And of course, this is templated as part of my Caddyfile:

{% for service in caddy_services %}

{{ service.domain_name }} {

{% if service.port is defined %}

reverse_proxy http://127.0.0.1:{{ service.port }}

{% if service.request_body is defined %}

request_body {

max_size 2048MB

}

{% endif %}

{% endif %}

{% if service.file_root is defined %}

root * {{ service.file_root }}

file_server

{% endif %}

tls {

issuer acme {

alt_http_port {{ caddy_http_port }}

alt_tlsalpn_port {{ caddy_https_port }}

}

}

}

{% endfor %}

But with this, Caddy finally works properly.
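The "bind: permission denied" on :443 in the log above is what a rootless process normally gets when it tries to bind a port below the kernel's unprivileged threshold, which is why the challenge has to be pointed at a high port. A minimal sketch of that check follows; `needs_privilege` is a hypothetical helper, and the fallback of 1024 is the usual Linux default rather than something read from this server.

```shell
#!/usr/bin/env bash
# Succeeds if binding PORT requires elevated privileges, given the lowest
# port that unprivileged processes are allowed to bind.
needs_privilege() {
  local port=$1 unprivileged_start=$2
  [ "$port" -lt "$unprivileged_start" ]
}

# Read the kernel's current threshold; fall back to the usual default (assumption).
start=$(cat /proc/sys/net/ipv4/ip_unprivileged_port_start 2>/dev/null || echo 1024)

for port in 443 8443; do
  if needs_privilege "$port" "$start"; then
    echo "port $port: a rootless Caddy cannot bind this"
  else
    echo "port $port: bindable without root"
  fi
done
```

With the default threshold, 443 falls on the privileged side and 8443 does not, which matches the ports shown in the podman ps output earlier.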

I saw another interesting thing: caddy security module (github), which lets you integrate caddy with ldap or other forms of authentication. I want to integrate this, because it would be better to have auth built into the single sign on.

#jinja2:lstrip_blocks: True

FROM docker.io/library/caddy:{{ caddy_version }}-builder AS builder

RUN xcaddy build {% for plugin in caddy_plugins %}--with {{ plugin }} {% endfor %}

FROM docker.io/library/caddy:{{ caddy_version }}

COPY --from=builder /usr/bin/caddy /usr/bin/caddy

With this, I have the caddy_security module installed.
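For reference, the RUN line in that template needs to render to one `--with` flag per plugin, each separated by a space. The sketch below builds the same command line in plain shell. `xcaddy_command` is a hypothetical helper; the first module path is what I believe the caddy-security plugin uses, and the second is a made-up placeholder.

```shell
#!/usr/bin/env bash
# Build the xcaddy command line from a list of plugins: one "--with" per plugin.
xcaddy_command() {
  local cmd="xcaddy build" plugin
  for plugin in "$@"; do
    cmd+=" --with $plugin"
  done
  echo "$cmd"
}

# The second plugin path is hypothetical, only here to show the spacing.
xcaddy_command github.com/greenpau/caddy-security example.com/hypothetical/plugin
```

If the rendered Dockerfile shows two flags run together (for example `caddy-security--with`), the loop body in the template is missing a separating space.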

Configuring it is much, much harder.

I started with copying the existing example Caddyfile they give:

Authentik

The listen settings are here: https://goauthentik.io/docs/installation/configuration/#listen-settings

So the podman pod which runs all of authentik will have those ports forwarded.

- name: Create relevant podman pod

containers.podman.podman_pod:

name: authentik_pod

state: present

ports:

- "9000:9000"

- "9443:9443"

- "3389:3389"

- "6636:3389"

I designed a role, and deployment was going pretty smoothly. Until it errored:

failed: [moonstack] (item=postgres) => {

"ansible_loop_var": "item",

"changed": false,

"item": "postgres",

"module_stderr": "Shared connection to 192.168.17.197 closed.",

"module_stdout": "

Traceback (most recent call last):

File \"/var/tmp/ansible-tmp-1708731694.1592944-80284-196261000245469/AnsiballZ_file.py\", line 107, in <module>

_ansiballz_main()

File \"/var/tmp/ansible-tmp-1708731694.1592944-80284-196261000245469/AnsiballZ_file.py\", line 99, in _ansiballz_main

invoke_module(zipped_mod, temp_path, ANSIBALLZ_PARAMS)

File \"/var/tmp/ansible-tmp-1708731694.1592944-80284-196261000245469/AnsiballZ_file.py\", line 47, in invoke_module

runpy.run_module(mod_name='ansible.modules.file', init_globals=dict(_module_fqn='ansible.modules.file', _modlib_path=modlib_path),

File \"<frozen runpy>\", line 226, in run_module

File \"<frozen runpy>\", line 98, in _run_module_code

File \"<frozen runpy>\", line 88, in _run_code

File \"/tmp/ansible_ansible.builtin.file_payload_37zf8n_k/ansible_ansible.builtin.file_payload.zip/ansible/modules/file.py\", line 987, in <module>

File \"/tmp/ansible_ansible.builtin.file_payload_37zf8n_k/ansible_ansible.builtin.file_payload.zip/ansible/modules/file.py\", line 973, in main

File \"/tmp/ansible_ansible.builtin.file_payload_37zf8n_k/ansible_ansible.builtin.file_payload.zip/ansible/modules/file.py\", line 680, in ensure_directory

File \"/tmp/ansible_ansible.builtin.file_payload_37zf8n_k/ansible_ansible.builtin.file_payload.zip/ansible/module_utils/basic.py\", line 1159, in set_fs_attributes_if_different

File \"/tmp/ansible_ansible.builtin.file_payload_37zf8n_k/ansible_ansible.builtin.file_payload.zip/ansible/module_utils/basic.py\", line 919, in set_mode_if_different

PermissionError: [Errno 1] Operation not permitted: b'/home/podmaner/authentik/postgres'",

"msg": "MODULE FAILURE\nSee stdout/stderr for the exact error",

"rc": 1

}

Which is… weird. Looking at the ansible code that generates this:

- name: Create relevant configuration files

ansible.builtin.file:

path: "{{ homepath.stdout }}/authentik/{{ item }}"

state: directory

mode: '0755'

loop: ["media", "custom-templates", "certs", "postgres", "redis"]

It’s the same exact task, looped through… but it only errors for /home/podmaner/authentik/postgres ‽

podmaner@thoth:~/authentik$ ls -la

total 28

drwxr-xr-x 7 podmaner podmaner 4096 Feb 23 15:37 .

drwx------ 9 podmaner podmaner 4096 Feb 23 15:37 ..

drwxr-xr-x 2 podmaner podmaner 4096 Feb 23 15:37 certs

drwxr-xr-x 2 podmaner podmaner 4096 Feb 23 15:37 custom-templates

drwxr-xr-x 2 podmaner podmaner 4096 Feb 23 15:37 media

drwx------ 2 165605 podmaner 4096 Feb 23 15:37 postgres

drwxr-xr-x 2 166534 podmaner 4096 Feb 23 15:39 redis

For some reason, only the postgres directory has incorrect permissions?

And the weirdest part: This only happens upon a rerun of the role. If I delete the /home/podmaner/authentik/ directory, and run the role, then everything runs properly, and the postgres directory gets the proper permissions.

Why does this happen? My intuition is telling me this is a bug, since the behavior is so blatantly inconsistent.

It’s not really that though. After deleting the postgres directory, it was recreated.

podmaner@thoth:~/authentik$ podman logs authentik_postgres

Error: Database is uninitialized and superuser password is not specified.

You must specify POSTGRES_PASSWORD to a non-empty value for the

superuser. For example, "-e POSTGRES_PASSWORD=password" on "docker run".

You may also use "POSTGRES_HOST_AUTH_METHOD=trust" to allow all

connections without a password. This is *not* recommended.

See PostgreSQL documentation about "trust":

https://www.postgresql.org/docs/current/auth-trust.html

Whoops. Lol. I was attempting to convert authentik’s docker-compose.yml to podman+ansible instructions, but my copy was incomplete, and I forgot to configure the postgres container properly.

That explains why the container won’t start… but it still doesn’t explain the file permissions error.

After taking a closer look at the output of ls -la, it seems that the podman containers change ownership of the files. Why doesn’t it do this for any of my other containers?

I’m guessing this is because the postgres and redis containers, unlike every other container, run their main process as a user other than root.
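The odd numeric owners in the ls output are consistent with that guess. In the default rootless mapping, container UID 0 is the user running podman, and container UID n (for n of 1 or more) lands on the (n-1)th ID of that user's /etc/subuid range. Here is a minimal sketch of the arithmetic, assuming a subuid range that starts at 165536 (inferred from the listing, not read from the server), a hypothetical host UID of 1001 for podmaner, and container UIDs of 70 for postgres and 999 for redis, which is what I believe those images use.

```shell
#!/usr/bin/env bash
# Map a container UID to the host UID under the default rootless mapping.
# Args: host UID of the user running podman, start of their subuid range,
# and the UID inside the container.
host_uid() {
  local user_uid=$1 subuid_start=$2 container_uid=$3
  if [ "$container_uid" -eq 0 ]; then
    # Container root is simply the invoking user.
    echo "$user_uid"
  else
    # Container UID 1 is the first subuid, UID 2 the second, and so on.
    echo $(( subuid_start + container_uid - 1 ))
  fi
}

# All four input values are assumptions, as described in the text.
host_uid 1001 165536 70    # prints 165605, the owner of the postgres directory
host_uid 1001 165536 999   # prints 166534, the owner of the redis directory
```

Both results line up with the owners shown by ls -la, so the directories are being chowned to the container's own service users rather than to anything random.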

I looked into podman user namespaces. First, I tried setting user namespaces for the relevant containers, but I got an error:

TASK [authentik : Authentik postgres database] ***********************************************************************************************************************

fatal: [moonstack]: FAILED! => {"changed": false, "msg": "Can't run container authentik_postgres", "stderr": "Error: --userns and --pod cannot be set together\n", "stderr_lines": ["Error: --userns and --pod cannot be set together"], "stdout": "", "stdout_lines": []}

So instead, I set the user namespace for the whole pod, and it didn’t error:

- name: Create relevant podman pod

containers.podman.podman_pod:

name: authentik_pod

recreate: true

It seems like the cause of the error is that the podmaner user no longer has write permissions, preventing it from doing anything to those specific files.

However, the podman container still died.


podmaner@thoth:~$ podman logs authentik_worker

{"event": "Loaded config", "level": "debug", "logger": "authentik.lib.config", "timestamp": 1708731540.8134813, "file": "/authentik/lib/default.yml"}

{"event": "Loaded environment variables", "level": "debug", "logger": "authentik.lib.config", "timestamp": 1708731540.813802, "count": 5}

{"event": "Starting authentik bootstrap", "level": "info", "logger": "authentik.lib.config", "timestamp": 1708731540.8138793}

{"event": "----------------------------------------------------------------------", "level": "info", "logger": "authentik.lib.config", "timestamp": 1708731540.8139021}

{"event": "Secret key missing, check https://goauthentik.io/docs/installation/.", "level": "info", "logger": "authentik.lib.config", "timestamp": 1708731540.8139138}

{"event": "----------------------------------------------------------------------", "level": "info", "logger": "authentik.lib.config", "timestamp": 1708731540.8139226}

{"event": "Loaded config", "level": "debug", "logger": "authentik.lib.config", "timestamp": 1708807909.1802225, "file": "/authentik/lib/default.yml"}

{"event": "Loaded environment variables", "level": "debug", "logger": "authentik.lib.config", "timestamp": 1708807909.1805325, "count": 5}

{"event": "Starting authentik bootstrap", "level": "info", "logger": "authentik.lib.config", "timestamp": 1708807909.1806104}

{"event": "----------------------------------------------------------------------", "level": "info", "logger": "authentik.lib.config", "timestamp": 1708807909.180633}

{"event": "Secret key missing, check https://goauthentik.io/docs/installation/.", "level": "info", "logger": "authentik.lib.config", "timestamp": 1708807909.1806445}

{"event": "----------------------------------------------------------------------", "level": "info", "logger": "authentik.lib.config", "timestamp": 1708807909.1806536}

{"event": "Loaded config", "level": "debug", "logger": "authentik.lib.config", "timestamp": 1708817283.6261683, "file": "/authentik/lib/default.yml"}

{"event": "Loaded environment variables", "level": "debug", "logger": "authentik.lib.config", "timestamp": 1708817283.6264079, "count": 5}

{"event": "Starting authentik bootstrap", "level": "info", "logger": "authentik.lib.config", "timestamp": 1708817283.6264803}

{"event": "----------------------------------------------------------------------", "level": "info", "logger": "authentik.lib.config", "timestamp": 1708817283.6265018}

{"event": "Secret key missing, check https://goauthentik.io/docs/installation/.", "level": "info", "logger": "authentik.lib.config", "timestamp": 1708817283.626513}

{"event": "----------------------------------------------------------------------", "level": "info", "logger": "authentik.lib.config", "timestamp": 1708817283.6265216}

podmaner@thoth:~$ podman logs authentik_server

{"event": "Loaded config", "level": "debug", "logger": "authentik.lib.config", "timestamp": 1708817279.5668721, "file": "/authentik/lib/default.yml"}

{"event": "Loaded environment variables", "level": "debug", "logger": "authentik.lib.config", "timestamp": 1708817279.567172, "count": 5}

{"event": "Loaded app settings", "level": "debug", "logger": "authentik.lib.config", "timestamp": 1708817281.6441257, "path": "authentik.admin.settings"}

{"event": "Loaded app settings", "level": "debug", "logger": "authentik.lib.config", "timestamp": 1708817281.644982, "path": "authentik.crypto.settings"}

{"event": "Loaded app settings", "level": "debug", "logger": "authentik.lib.config", "timestamp": 1708817281.6455739, "path": "authentik.events.settings"}

{"event": "Loaded app settings", "level": "debug", "logger": "authentik.lib.config", "timestamp": 1708817281.6467671, "path": "authentik.outposts.settings"}

{"event": "Loaded app settings", "level": "debug", "logger": "authentik.lib.config", "timestamp": 1708817281.6484737, "path": "authentik.policies.reputation.settings"}

{"event": "Loaded app settings", "level": "debug", "logger": "authentik.lib.config", "timestamp": 1708817281.651618, "path": "authentik.providers.scim.settings"}

{"event": "Loaded app settings", "level": "debug", "logger": "authentik.lib.config", "timestamp": 1708817281.6528664, "path": "authentik.sources.ldap.settings"}

{"event": "Loaded app settings", "level": "debug", "logger": "authentik.lib.config", "timestamp": 1708817281.6535037, "path": "authentik.sources.oauth.settings"}

{"event": "Loaded app settings", "level": "debug", "logger": "authentik.lib.config", "timestamp": 1708817281.6540685, "path": "authentik.sources.plex.settings"}

{"event": "Loaded app settings", "level": "debug", "logger": "authentik.lib.config", "timestamp": 1708817281.6560044, "path": "authentik.stages.authenticator_totp.settings"}

{"event": "Loaded app settings", "level": "debug", "logger": "authentik.lib.config", "timestamp": 1708817281.6598718, "path": "authentik.blueprints.settings"}

{"event": "Booting authentik", "level": "info", "logger": "authentik.lib.config", "timestamp": 1708817281.6600318, "version": "2023.10.7"}

{"event": "Enabled authentik enterprise", "level": "info", "logger": "authentik.lib.config", "timestamp": 1708817281.661141}

{"event": "Loaded app settings", "level": "debug", "logger": "authentik.lib.config", "timestamp": 1708817281.661508, "path": "authentik.enterprise.settings"}

{"event": "Loaded GeoIP database", "last_write": 1706549214.0, "level": "info", "logger": "authentik.events.geo", "pid": 2, "timestamp": "2024-02-24T23:28:02.805111"}

{"app_name": "authentik.crypto", "event": "Failed to run reconcile", "exc": "ProgrammingError('relation \"authentik_crypto_certificatekeypair\" does not exist\\nLINE 1: ...hentik_crypto_certificatekeypair\".\"key_data\" FROM \"authentik...\\n ^')", "level": "warning", "logger": "authentik.blueprints.apps", "name": "managed_jwt_cert", "pid": 2, "timestamp": "2024-02-24T23:28:03.610865"}

{"app_name": "authentik.crypto", "event": "Failed to run reconcile", "exc": "ProgrammingError('relation \"authentik_crypto_certificatekeypair\" does not exist\\nLINE 1: SELECT 1 AS \"a\" FROM \"authentik_crypto_certificatekeypair\" W...\\n ^')", "level": "warning", "logger": "authentik.blueprints.apps", "name": "self_signed", "pid": 2, "timestamp": "2024-02-24T23:28:03.612779"}

{"app_name": "authentik.outposts", "event": "Failed to run reconcile", "exc": "ProgrammingError('relation \"authentik_outposts_outpost\" does not exist\\nLINE 1: ..._id\", \"authentik_outposts_outpost\".\"_config\" FROM \"authentik...\\n ^')", "level": "warning", "logger": "authentik.blueprints.apps", "name": "embedded_outpost", "pid": 2, "timestamp": "2024-02-24T23:28:03.647866"}

{"event": "Task published", "level": "info", "logger": "authentik.root.celery", "pid": 2, "task_id": "d28b9ddc12eb44eaa99a25dfe3477f37", "task_name": "authentik.blueprints.v1.tasks.blueprints_discovery", "timestamp": "2024-02-24T23:28:03.876853"}

{"event": "Task published", "level": "info", "logger": "authentik.root.celery", "pid": 2, "task_id": "763c497b4e4c43e2bb745de6a613e104", "task_name": "authentik.blueprints.v1.tasks.clear_failed_blueprints", "timestamp": "2024-02-24T23:28:03.878293"}

{"app_name": "authentik.core", "event": "Failed to run reconcile", "exc": "ProgrammingError('relation \"authentik_core_source\" does not exist\\nLINE 1: ...\"authentik_core_source\".\"user_matching_mode\" FROM \"authentik...\\n ^')", "level": "warning", "logger": "authentik.blueprints.apps", "name": "source_inbuilt", "pid": 2, "timestamp": "2024-02-24T23:28:03.881205"}

It seems I forgot another environment variable, which has been documented on authentik’s site.

I did that, and it still dies.
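Two of the failures so far came down to a missing environment variable, so a preflight check before starting the containers would have caught them earlier. A minimal sketch follows; `missing_env` is a hypothetical helper, POSTGRES_PASSWORD comes from the postgres error above, and AUTHENTIK_SECRET_KEY is my assumption for the variable behind the "Secret key missing" log line.

```shell
#!/usr/bin/env bash
# Print each named variable that is unset or empty; fail if any is missing.
missing_env() {
  local name missing=0
  for name in "$@"; do
    # ${!name} is bash indirect expansion: the value of the variable named by $name.
    if [ -z "${!name:-}" ]; then
      echo "missing: $name"
      missing=1
    fi
  done
  return "$missing"
}

# Example values only; real secrets would come from the ansible vault or similar.
POSTGRES_PASSWORD="example-only"
unset AUTHENTIK_SECRET_KEY
missing_env POSTGRES_PASSWORD AUTHENTIK_SECRET_KEY || echo "refusing to start containers"
```

This only checks that the values exist, not that they are correct, so it would not have helped with the file permission problem that follows.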

Investigating the error, it seems to be about file permissions: the container is unable to create a directory it needs.

Traceback (most recent call last):

File "<frozen runpy>", line 198, in _run_module_as_main

File "<frozen runpy>", line 88, in _run_code

File "/lifecycle/migrate.py", line 98, in <module>

migration.run()

File "/lifecycle/system_migrations/tenant_files.py", line 15, in run

TENANT_MEDIA_ROOT.mkdir(parents=True)

File "/usr/local/lib/python3.12/pathlib.py", line 1311, in mkdir

os.mkdir(self, mode)

PermissionError: [Errno 13] Permission denied: '/media/public'

I need to do more research into how rootless podman, and the different userns modes, interact with volumes.

I found one article, but its focus isn’t quite what I want, and it lacks an overview of the different userns modes and how they interact with volume mounts.

I spent some time experimenting, and did some more research. Something crucial was a comment I noticed in the docker-compose, under the section related to the “worker” node of authentik:

# `user: root` and the docker socket volume are optional.

# See more for the docker socket integration here:

# https://goauthentik.io/docs/outposts/integrations/docker

# Removing `user: root` also prevents the worker from fixing the permissions

# on the mounted folders, so when removing this make sure the folders have the correct UID/GID

# (1000:1000 by default)

So apparently, authentik expects to run as user 1000 and group 1000, and not anything else. Thankfully, podman has a special userns mode, keep-id, which maps the host user running podman to a chosen UID/GID inside the container:

- name: Create relevant podman pod

containers.podman.podman_pod:

name: authentik_pod

recreate: true

# userns: host

userns: keep-id:uid=1000,gid=1000

state: started

......

After this, it works. But I’m still not satisfied. Postgres keeps on changing the permissions of its own bind mount.

According to the docker library page for postgres (from Arbitrary –user Notes):

The main caveat to note is that postgres doesn’t care what UID it runs as (as long as the owner of /var/lib/postgresql/data matches), but initdb does care (and needs the user to exist in /etc/passwd):

It then offers three ways to get around this, one of which is docker’s --user flag. Red Hat has an article mentioning that this exists in podman as well. It didn’t work.

I did some more research into why it is not possible to have a different user namespace mapping for each container in a pod. The short version is that containers within a pod need to be in the same user namespace in order to interact with each other properly, for things like networking.

I made a Dockerfile for postgres, adding the authentik user to the container:

FROM docker.io/library/postgres:12-alpine

RUN echo "authentik:x:1000:1000::/authentik:/usr/sbin/nologin" > /etc/passwd

It still chowns/chmods the whole directory to be 700, but that’s okay. Apparently, this is intended behavior, hardcoded, and postgres refuses to start if file permissions are not 700. The user running postgres is required to exclusively own the postgres data folders.

But now file permissions are correct at least, and the user in the container is mapped to the user outside the container, enabling the directory to be manipulated properly (backed up, restored, etc) outside of the container.
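A quick way to confirm that state from the host is to check the mode and owner of the bind-mounted directory. The sketch below assumes GNU stat (the `-c` flag); `check_pgdata` is a hypothetical helper, and the demo runs against a throwaway temporary directory rather than the real postgres path.

```shell
#!/usr/bin/env bash
# Succeeds only if DIR has mode 700 and is owned by the expected numeric UID.
check_pgdata() {
  local dir=$1 expected_uid=$2 mode owner
  mode=$(stat -c '%a' "$dir") || return 1    # octal permissions, e.g. 700
  owner=$(stat -c '%u' "$dir") || return 1   # numeric owner UID
  [ "$mode" = "700" ] && [ "$owner" = "$expected_uid" ]
}

# Demo on a temporary directory owned by the current user.
demo=$(mktemp -d)
chmod 700 "$demo"
check_pgdata "$demo" "$(id -u)" && echo "ok: mode 700 and owned by the current user"
rm -r "$demo"
```

On the server this would be run as podmaner against /home/podmaner/authentik/postgres, with podmaner's own UID as the expected owner.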

However, I still get another error. Even with a fresh authentik deployment, the authentik_worker container gives an error:

Traceback (most recent call last):

File "/lifecycle/migrate.py", line 98, in <module>

migration.run()

File "/lifecycle/system_migrations/tenant_to_brand.py", line 25, in run

self.cur.execute(SQL_STATEMENT)

File "/ak-root/venv/lib/python3.12/site-packages/psycopg/cursor.py", line 732, in execute

raise ex.with_traceback(None)

psycopg.errors.DuplicateTable: relation "authentik_brands_brand" already exists

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "<frozen runpy>", line 198, in _run_module_as_main

File "<frozen runpy>", line 88, in _run_code

File "/lifecycle/migrate.py", line 118, in <module>

release_lock(curr)

File "/lifecycle/migrate.py", line 67, in release_lock

cursor.execute("SELECT pg_advisory_unlock(%s)", (ADV_LOCK_UID,))

File "/ak-root/venv/lib/python3.12/site-packages/psycopg/cursor.py", line 732, in execute

raise ex.with_traceback(None)

psycopg.errors.InFailedSqlTransaction: current transaction is aborted, commands ignored until end of transaction block

I think this is because there are no container dependencies or the like set up, so the authentik_worker starts before postgres is finished doing its thing. To test, I deployed the databases first, and then started the worker and server containers. Sure enough, authentik then deploys properly, without erroring.
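The manual ordering used in that test can be approximated with a retry loop that blocks until the database answers. This is only a stopgap sketch, not a replacement for real dependencies; `wait_for` is a hypothetical helper, and the attempt count and delay are arbitrary.

```shell
#!/usr/bin/env bash
# Retry a command until it succeeds or the attempt budget runs out.
# Usage: wait_for ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
wait_for() {
  local attempts=$1 delay=$2 i
  shift 2
  for (( i = 1; i <= attempts; i++ )); do
    if "$@"; then
      return 0
    fi
    sleep "$delay"
  done
  return 1
}

# Trivial demo: "true" succeeds on the first attempt.
wait_for 3 0 true && echo "ready"
```

On the server, something like `wait_for 30 2 podman exec authentik_postgres pg_isready` could run before starting the worker and server containers, assuming pg_isready is available inside the postgres image.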

To get around this, I need to add dependencies to my containers. To do that, I need to use podman’s systemd integration. Because that will apply to many other services and doesn’t belong in this section, I am continuing in podman systemd integration.

Configuration

Yeah, there’s a lot of stuff to configure. Single-Sign-On is very, very complex.

I started out by adding a single user, my main user under Directory > Users, called “moonpiedumplings”.

This user is usable via

Applications

Invitations

Authentik has a feature called “invitations”, where it can generate a single-use or multi-use link that people can use to self-register.

The goal here is that all my users are stored within authentik, and no services have any local users. I can use invites to

Here are the docs on invitations: https://docs.goauthentik.io/docs/user-group-role/user/invitations

Terraform

Authentik has a terraform provider: https://registry.terraform.io/providers/goauthentik/authentik/latest/docs

But I don’t think I’m going to use it, as that’s yet another layer of complexity.

Forgejo

I started by finding forgejo’s docker installation guides: https://forgejo.org/docs/next/admin/installation-docker/

However, there is another problem:

Although forgejo supports rootless, it seems to expect a userid and groupid of 1000:1000, which is not the user I am running it as. Similar to Authentik, I will have to create the pod with userns mapping.

I basically copied the Authentik deployment, and got it deployed.

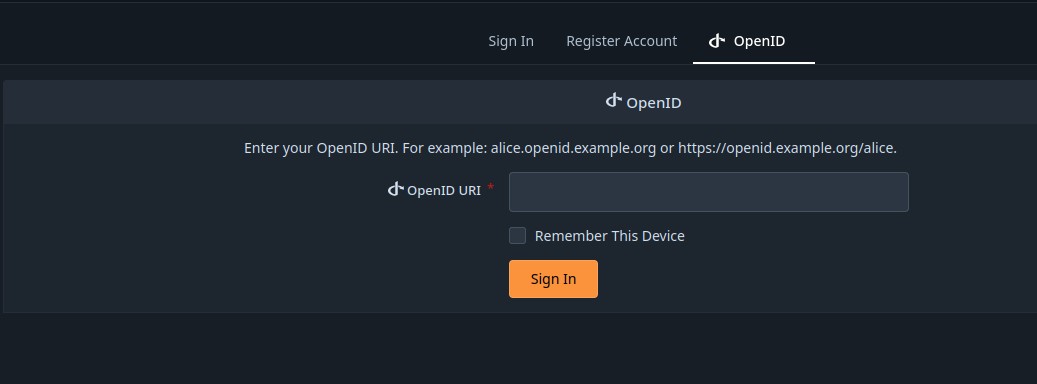

So it seems that when forgejo is first set up, the first user to register becomes the “admin” user, able to administer the site. I can’t add the oauth2 from the openid.

I created an admin account, and am given an interface to add an oauth2 provider, but I’m wondering if there is some way to do this via configuration files, instead of from the GUI.

Bitwarden/Vaultwarden

I want to self host a password manager, and I have selected vaultwarden, an open source alternative implementation of the bitwarden server.

Like other services, I don’t want vaultwarden to have any of its own users, and instead to connect to Authentik via LDAP or Oauth2.

However, I quickly realized a problem: Password managers (when properly architected) encrypt the password database via a master password… if Vaultwarden is authenticating users via LDAP, then how will it get the master password?

I found a few solutions

This seems more doable, but it doesn’t sync passwords, merely automates invites to vaultwarden.

I don’t fully understand how this software works yet, but it seems to sync vaultwarden users with LDAP.

Here are some docs: https://bitwarden.com/help/ldap-directory/

This seems to be better, but more complex to use. I think it syncs passwords as well, but I don’t know yet.

Presearch for future pieces

Kubernetes


podmaner@thoth:~$ podman kube generate authentik_pod

spec:

automountServiceAccountToken: false

containers:

....

....

hostUsers: false

hostname: authentik_pod

This looked wrong to me at first, but hostUsers: false means the pod does not use the host’s user namespace. In other words, it runs in its own user namespace, which matches what I am doing right now in podman. See the [kubernetes docs]

Openstack Notes

I will probably get to this later on.

Rather than trying to do an openstack native implementation of public ipv6 addresses for virtual machines on a private ip network, I can simply have my router set up a “private” ipv6 subnet, and then route it over a VPN (or L2TP, which does not come with encryption). Then, I can do something like a 1 to 1 NAT, but without any address translation. By putting VMs on this subnet, I can give them public ipv6 addresses. This is simpler, and compatible with more than just openstack.

Something like this is definitely possible.

https://superuser.com/questions/887745/routing-a-particular-subnet-into-a-vpn-tunnel

https://superuser.com/questions/1162231/route-public-subnet-over-vpn